Augmented reality is nothing new for Google. In fact, it is a segment in which the company has wanted to gain a foothold for more than a decade. Glass was the project that made us dream of glasses that would put all the information we wanted right in front of our eyes. The problem is that the technology was simply not ready at the time.

With the arrival of generative AI, the scales have evened out considerably. During Google I/O, the company showed a prototype of glasses reminiscent of Google Glass, now powered by Gemini. We were able to test this same prototype during our visit to the event, and below we tell you what we found. A word of warning: this is still a prototype, so Google's aim has been to collect feedback through closed-door tests.

Glass lacked two key ingredients: an AI like Gemini and the technology we have today

Google's return to the field of smart glasses seems more alive than ever, especially after learning about the development of the Android XR platform and the company's collaboration with Samsung and Qualcomm on Project Moohan, the headset that aims to stand up to Apple's Vision Pro.

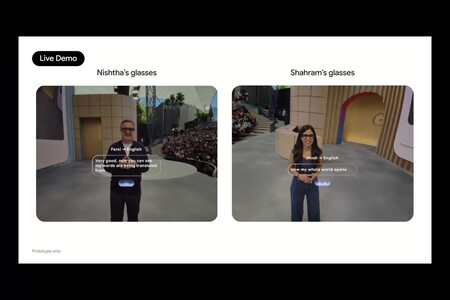

Real-time translation could become one of the most in-demand features for this type of device | Image: Google

The company seems to be coming back with a renewed approach, important strategic alliances and much more mature technology. Android XR aims to spread across glasses in multiple form factors, and during the event we were able to spend a little time with its glasses designed to be worn all day.

Google and Samsung did not hide the design of Project Moohan, and they have not hidden the design of these glasses either. Their discreet look seems inspired by Meta's recent collaboration with Ray-Ban, and it is a clear recognition that smart glasses need to be, above all, glasses that people want to wear.

The demo (glitches included) during the Google I/O keynote | Image: Google

During the test with this prototype, I was able to see how Google has integrated its artificial intelligence assistant, Gemini, into a format that takes advantage of a first-person view. Unlike the original Glass proposal, where the technology was the protagonist, here the experience flows more naturally. We would have loved to show you the interface during the test or a closer look at the glasses themselves, but unfortunately those were among the restrictions we had to abide by.

Sergey Brin himself, Google's co-founder, acknowledged during the event the mistakes made with Google Glass, admitting that back then they did not understand the complexity of consumer electronics supply chains or how difficult it would be to make smart glasses at a reasonable price.

'I see what you see'

Gemini performs really well as we interact with the assistant through the glasses. The assistant uses the camera built into the glasses to 'see what we see'. In one of the demos, I stopped in front of a painting and simply asked who painted it and what it depicted. Without my having to specify which painting I meant, the assistant answered accurately, providing information about the work and other related details of interest.

Prototype glasses in the test with Gemini | Image: Xataka

In another test, I also got a small taste of its potential to help us with any kind of task by asking how a coffee machine in front of me worked. The assistant not only identified the model but also detailed the step-by-step procedure for making coffee.

Although all the tests took place in a safe environment where Google employees were in control in case something strange happened, they gave me a glimpse of the whole world of possibilities Google now has thanks to Gemini and to technology that is now ready.

Gemini drives device capabilities | Image: Google

This ability to “see” and understand context makes a substantial difference compared with assistants that depend only on voice or text commands. It is like having a companion who observes the world from your perspective and helps you when you need it. A jack-of-all-trades that helps with whatever you need. That concept of direct help also extends to other projects Google has underway and that we saw at Google I/O, such as Search Live.

In the prototype's current state, the glasses had a touch button on one of the temples that lets you activate or pause Gemini, so that the assistant is not constantly listening and watching. In addition, when the camera is active, an LED lights up so that people around you know you are recording.

This is the prototype used in the demos | Image: Xataka

The information was projected onto one of the lenses, and everything we heard from Gemini could also be read as text. In its current state, the text is somewhat uncomfortable to read, and that is coming from someone with no vision problems. However, we must remember that this is a very early prototype, so this is something that will surely change a lot during the development cycle. It is also worth remembering that Android XR is only beginning to be developed for this new type of glasses this year, as the company revealed during its keynote.

Alliances and price will be everything in determining its success

What also sets this project apart from previous attempts is the collaboration strategy Google is deploying. During I/O, the company announced partnerships with eyewear makers such as Gentle Monster and Warby Parker, in addition to deepening its technology alliance with Samsung and Qualcomm. Google is investing up to 150 million dollars in its partnership with Warby Parker.

Despite the enthusiasm, we have to be realistic: what I was able to try was a very early prototype with limited functionality in a controlled environment. The demos focused on very specific use cases, so everything indicates that there is still a long way to go. Project Moohan is much more mature, since the intention is for the headset to launch in 2025.

Above all, we will have to see the price and how Gemini responds in more complex real-world situations. It also remains to be seen how Android XR takes shape: the platform still lacks an identity of its own, but little by little we are seeing what it is capable of.

Cover image | Xataka
