The race to build the most capable smartphone will not be decided by components alone: AI has become absolutely essential to winning this battle. Gemini is the undisputed protagonist, both on Android and on an iOS where the presence of AI is insignificant. It is a complete and capable assistant, with the requirement of being permanently connected to the Internet.

Google, Gemini's parent, has decided to give 100% local models a chance on Android, including those of its competitors. I had never run a local AI model on a PC, yet I managed to do it on my phone in five minutes.

Installing Google AI Edge Gallery

Google has released an open source app that lets any user interact with multimodal AI models. It is completely free, has no ads, and its only limitation is that it is not published on the Google Play Store. You have to download it from GitHub.

It is as easy as clicking the link and downloading the file, which weighs 115 MB. Once it has downloaded, tap it and install it like any other app. Depending on the Android skin you use and the browser you download it from, the system may ask for a permission or two. Grant them without fear, since it is a clean Google app.

Once installed, the app will be in the app drawer or on the home screen of your phone, depending on your skin. Just open it and download the available models to start using it.

Using local AI on my Android

This application lets you use four preset local AI models:

- Gemma-3n-E2B-it-int4

- Gemma-3n-E4B-it-int4

- Gemma3-1B-it-q4

- Qwen2.5-1.5B-Instruct-q8

Each of these names will probably sound like gibberish, but there is a very simple summary. Gemma is Google's family of models, and the figure in each name ("E2B", "E4B", "1B") refers to the model's parameter count (2 billion, 4 billion, and 1 billion, respectively). This means that Gemma 3 1B is the fastest but most basic model, followed by E2B, with E4B at the top as the most complete.

Qwen, for its part, is a Chinese model developed by Alibaba, with 1.5 billion parameters and fairly reasonable accuracy. These are the models the app ships with, but we can also install models on our own.
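To make the naming scheme concrete, here is a minimal Python sketch that pulls the parameter count and the quantization width out of each name and derives a rough size for the weights. The model names come from the list above (with casing normalised); the `ModelInfo` class, the `parse_model_name` helper, and the "parameters times bits divided by 8" estimate are illustrative assumptions, not something the app exposes. The estimate only covers the main weights, so real downloads may be larger.

```python
import re
from dataclasses import dataclass

# Model names as listed in the app (casing normalised for illustration).
MODEL_NAMES = [
    "Gemma-3n-E2B-it-int4",
    "Gemma-3n-E4B-it-int4",
    "Gemma3-1B-it-q4",
    "Qwen2.5-1.5B-Instruct-q8",
]


@dataclass
class ModelInfo:
    """Hypothetical container for what can be read off a model name."""
    name: str
    params_billions: float
    bits_per_weight: int

    def approx_weight_gb(self) -> float:
        # Assumption: size ~ parameters * bits / 8 bytes.
        # With parameters in billions, the result is in GB (1e9 bytes).
        return self.params_billions * self.bits_per_weight / 8


def parse_model_name(name: str) -> ModelInfo:
    # Size token such as "E2B", "1B" or "1.5B", delimited by hyphens.
    size = re.search(r"(?:^|-)E?(\d+(?:\.\d+)?)B(?:-|$)", name, re.IGNORECASE)
    # Quantization token such as "int4", "q4" or "q8" at the end of the name.
    quant = re.search(r"(?:int|q)(\d+)$", name, re.IGNORECASE)
    if size is None or quant is None:
        raise ValueError(f"unrecognised model name: {name}")
    return ModelInfo(name, float(size.group(1)), int(quant.group(1)))


if __name__ == "__main__":
    for model_name in MODEL_NAMES:
        info = parse_model_name(model_name)
        print(
            f"{info.name}: ~{info.params_billions}B params, "
            f"{info.bits_per_weight}-bit, "
            f"~{info.approx_weight_gb():.2f} GB of weights"
        )
```

Under these assumptions, the 8-bit Qwen model ends up with a larger estimated footprint than the 4-bit Gemma 3 1B even though both are small models, which is one reason the quantization suffix matters as much as the parameter count.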

What I gain and what I lose by using local AI

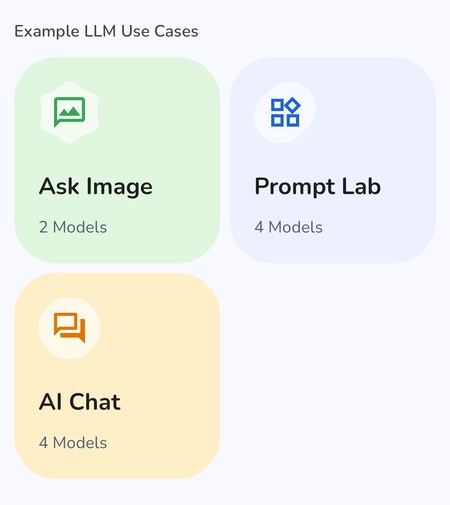

The Google app is designed around three use cases:

- Ask Image: questions about images, such as problem solving, identifying objects, etc.

- Prompt Lab: summaries, text rewriting, and code generation.

- AI Chat: multi-turn conversations with the AI.

Local AI is somewhat more limited in these use cases, but in return it is more private. It runs 100% on your phone, with no connection to servers. This should minimize response latency, something I wanted to verify with a fairly simple test: translating a fairly short text.

“If the computing power on each chip continued to grow exponentially, Moore realized, […] demanded were useful in business applications, too. Defense contractors thought about chips mostly as a product that could replace older electronics in all the military systems.

“When U.S. Defense Secretary Robert McNamara reformed military procurement to cut costs in the early 1960s, causing what some in the electronics industry called the ‘McNamara Depression,’ Fairchild’s vision of chips for civilians seemed prescient. […] off-the-shelf integrated circuits for civilian customers […] even sold products below manufacturing cost, hoping to convince more customers to try them.”

- ChatGPT (4o): 5 seconds

- DeepSeek: 19 seconds

- Google local (Gemma 3 1B): did not understand the instruction.

- Google local (Gemma 3n E2B): 26 seconds.

- Google local (Gemma 3n E4B): 34 seconds.

- Google local (Qwen 2.5): 16 seconds.
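For a quick way to compare these figures, the short Python sketch below ranks the runs that completed and expresses each one as a multiple of the fastest cloud result. The timings are the ones reported above from a single informal run, so they are not a benchmark; the dictionary labels and the `rank_by_latency` and `slowdown_vs` helpers are hypothetical names chosen for illustration.

```python
# Timings reported in the test above, in seconds; None marks a failed run.
RESULTS = {
    "ChatGPT": 5,
    "DeepSeek": 19,
    "Gemma 1B (local)": None,
    "Gemma E2B (local)": 26,
    "Gemma E4B (local)": 34,
    "Qwen 2.5 (local)": 16,
}


def rank_by_latency(results):
    """Return (name, seconds) pairs for completed runs, fastest first."""
    completed = {name: secs for name, secs in results.items() if secs is not None}
    return sorted(completed.items(), key=lambda item: item[1])


def slowdown_vs(results, baseline):
    """Express each completed run as a multiple of the baseline's time."""
    base = results[baseline]
    return {
        name: round(secs / base, 1)
        for name, secs in results.items()
        if secs is not None
    }


if __name__ == "__main__":
    for name, secs in rank_by_latency(RESULTS):
        print(f"{name}: {secs} s")
    for name, factor in slowdown_vs(RESULTS, "ChatGPT").items():
        print(f"{name}: {factor}x the ChatGPT time")
```

Read this way, the fastest local model (Qwen 2.5) took roughly three times as long as ChatGPT in this particular run, while still beating DeepSeek.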

The results are quite uneven, which reflects the fact that the app is at an early stage. The main problem I have found is that it sometimes struggles to run the model, even with light models such as Qwen. On a Samsung Galaxy S25 Ultra I had to restart the app on a few occasions because it would not execute the prompt.

I also wanted to test how well it solves simple problems through visual understanding of images, using Gemma's heavy E4B model. This was also quite a disaster. On the first attempt I asked it to solve all the problems. It succeeded. On the second, I asked it to solve only the first one. It got it wrong and, after I told it so, it got it wrong again.

Yes, it does a good job of recognizing elements in images, but it still has a hard time going further (Google only lets you choose its Gemma models for these tasks).

The conversational assistant works well, within its limitations. It is useful for chatting and for helping with any task we can think of that does not need a real-time Internet search.

If you are especially protective of your privacy and want to run AI without any server connection, it is a relatively valid alternative. Google treats this app as a test lab. At least for now it is not a real alternative to Gemini, far from it.

Image | Xataka
