Today I asked Gemini twice what the three-body problem consists of. The first time I asked the regular Gemini, which after thinking for a few seconds gave me a text answer that was well structured but scared me a little at first, because it even included equations. This is what the response looked like:

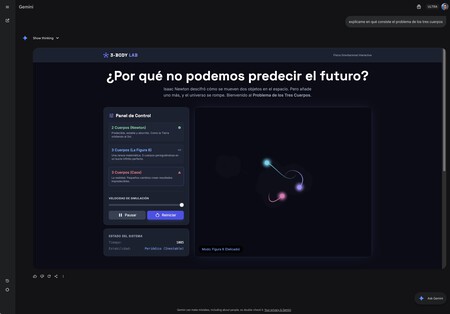

Then I decided to ask Gemini again, but this time taking advantage of the new feature called Dynamic View. Google introduced this option a few days ago, and with it Gemini does not respond in text mode, but visually. This is what the response looked like:

To help me understand the concept, it created a simulation in which I could switch between different simulation modes and speeds. It then complemented the simulation with short texts explaining what happens when there are only two bodies (like the Earth and the Moon) and what happens when there are three bodies and the butterfly effect kicks in.

The system becomes so chaotic and complex that triple star systems in the universe are often unstable. I didn’t understand it nearly as well with the formula, but with that simulation, I did.
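For readers who want to poke at the butterfly effect themselves, here is a minimal sketch in Python. It is not what Gemini generated: the units, masses, starting positions, softening term and helper names (`accelerations`, `simulate`, `divergence`) are all assumptions chosen for illustration. It runs the same system twice, the second time with one body nudged by a billionth of a unit, and reports how far apart the two runs end up.

```python
import math

G = 1.0  # gravitational constant in arbitrary units (assumption)


def accelerations(pos, masses, soft=1e-3):
    """Newtonian acceleration on each body from all the others (2D).

    `soft` is a small softening length (assumption) that avoids a
    division by zero if two bodies pass extremely close to each other.
    """
    acc = [[0.0, 0.0] for _ in pos]
    for i in range(len(pos)):
        for j in range(len(pos)):
            if i == j:
                continue
            dx = pos[j][0] - pos[i][0]
            dy = pos[j][1] - pos[i][1]
            r3 = (dx * dx + dy * dy + soft * soft) ** 1.5
            acc[i][0] += G * masses[j] * dx / r3
            acc[i][1] += G * masses[j] * dy / r3
    return acc


def simulate(pos, vel, masses, dt=0.001, steps=20000):
    """Advance the system with velocity Verlet; return final positions."""
    pos = [p[:] for p in pos]  # work on copies, leave the inputs alone
    vel = [v[:] for v in vel]
    acc = accelerations(pos, masses)
    for _ in range(steps):
        for i in range(len(pos)):
            for k in range(2):
                vel[i][k] += 0.5 * dt * acc[i][k]  # half kick
                pos[i][k] += dt * vel[i][k]        # drift
        acc = accelerations(pos, masses)
        for i in range(len(pos)):
            for k in range(2):
                vel[i][k] += 0.5 * dt * acc[i][k]  # second half kick
    return pos


def divergence(pos, vel, masses, nudge=1e-9, **kwargs):
    """Largest gap between a run and a copy with body 0 nudged in x."""
    reference = simulate(pos, vel, masses, **kwargs)
    nudged = [p[:] for p in pos]
    nudged[0][0] += nudge
    perturbed = simulate(nudged, vel, masses, **kwargs)
    return max(math.dist(p, q) for p, q in zip(reference, perturbed))


if __name__ == "__main__":
    # Two equal masses on a roughly circular orbit (illustrative values).
    two = divergence([[-0.5, 0.0], [0.5, 0.0]],
                     [[0.0, -0.7], [0.0, 0.7]],
                     [1.0, 1.0])
    # Three bodies in a lopsided arrangement (illustrative values).
    three = divergence([[-1.0, 0.0], [1.0, 0.0], [0.2, 0.6]],
                       [[0.0, -0.4], [0.0, 0.4], [0.3, 0.0]],
                       [1.0, 1.0, 1.0])
    print(f"two bodies:   final gap {two:.3e}")
    print(f"three bodies: final gap {three:.3e}")
```

One would typically expect the three-body gap to grow far faster than the two-body one, although the exact numbers depend on the starting values, the time step and how long the simulation runs.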

This is a clear example of where things are heading in the world of artificial intelligence chatbots. In the future that Gemini proposes, the conversation can become, if we wish, much more visual and interactive. Almost like a game, because by modifying the simulation we can check the effect of that change in real time. It’s easier for the concept to “click”, and that, dear readers, is addictive.

Google talked about all this when it presented the feature last week, explaining that this option “allows AI models to create immersive experiences, interactive tools and simulations, completely generated in real time for any prompt.”

The practical applications of something like this are, once again, almost limitless. One can use these dynamic views to understand probability theory, to get fashion tips, or to remember how ‘How I Met Your Mother’ ended.

And yes, Gemini did show how ‘How I Met Your Mother’ ended, although I have hidden the text so as not to spoil the ending for those who have not seen the series. If you haven’t watched it, I recommend it 😉

While I was at it, I asked for the impossible: explain the movie ‘Tenet’ to me. It tried with a good visual diagram (the video below shows that interactive response), but it didn’t help me much, because I’m afraid that movie is absolutely inexplicable. That’s not me saying it: Nolan says so himself.

Visual and interactive summaries take a few seconds to generate and are not for the impatient, but once they are ready the answers do not disappoint: the interactivity and visual content enrich the answer and make it much more digestible and attractive for the user. It is the tiktokization of AI, meant to make it even more direct.

This approach from Google demonstrates once again how strong a run the company has been on for the past few months. The Nano Banana phenomenon turned it into a company that finally showed its potential, and first Gemini 2.5 Flash and Pro a few months ago and now Gemini 3, which certainly seems to be a step above its rivals, have confirmed the optimism surrounding the company.

This latest innovation, Dynamic View, is one of the most powerful and disruptive we have seen in the use of AI in these three years, and it follows the path the company had already laid out with the fabulous NotebookLM.

Let’s go shopping with ChatGPT

Google, of course, is not alone in that effort. OpenAI has been an absolute benchmark in the productization of AI, and with ChatGPT it nailed the user experience from the very first moment, which made us want to use the chatbot for more and more things.

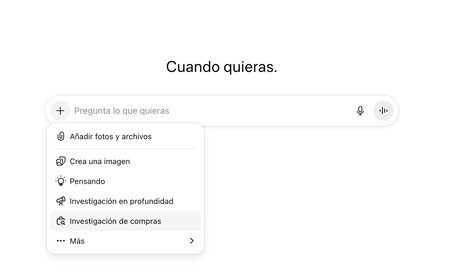

The company led by Sam Altman has also spent a long time putting forward interesting proposals for applying AI to all kinds of scenarios, and now it has come up with a new one that is tailor-made for these Black Friday days: a “Purchase Research” mode that goes beyond finding products for us.

It goes further because it does not stick to our initial prompt, but instead asks us follow-up questions about it. For example, I am looking for a cheap 27-inch monitor with 1440p (QHD) resolution, mostly for office use. And that is what I typed into the search box.

That is where the surprises began, because in this mode ChatGPT does not give you the answer directly. Instead it asks you a few more questions in “survey” mode, offering boxes to tick. Preferred connectivity? (HDMI.) What budget do you have? (Less than 150 euros.) Which panel do you prefer? (I don’t care.)

After these questions, ChatGPT presents some preliminary options on screen so that you can tell it whether its results are on the right track or not (and if they are not, it asks you why, for example because of price or features).

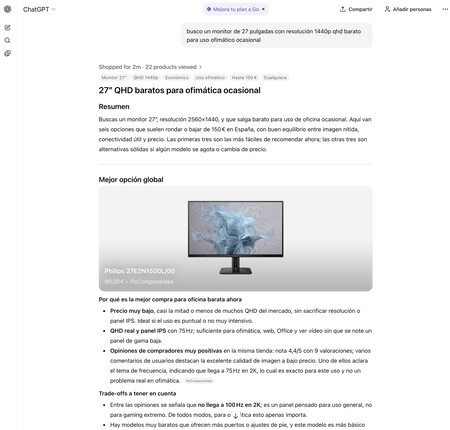

After two and a half minutes, the chatbot presented an interesting personalized shopping guide in which it recommended this Philips 27E2N1500L/00, which costs 99 euros and which I will probably end up buying.

Obviously this tool is interesting for users, but also for OpenAI, because it is one more move in its strategy of becoming our indispensable ally for all kinds of purchases.

ChatGPT wants to be a useful shopping assistant that helps us find products… and that earns OpenAI a commission along the way. We already saw it with Instant Checkout, and this is another move pointing to that promising line of income for the company, which certainly needs it badly.

But beyond that, the Purchase Research mode is another good example of how these searches no longer stop at what we ask. Instead, the chatbot asks us questions to better understand what we want and then gives the best answer it can, with visual and interactive elements.

The prompt is no longer so important in scenarios like this, because if you haven’t fine-tuned it the first time, the chatbot will talk to you to polish and perfect it. And that matters, because it makes using AI models even simpler and more accessible. Before, a poor answer could be blamed on us with “the prompt you used wasn’t very good”, but now even that no longer holds.

It is, we insist, the beginning of a new era for AI chatbots. One in which they become a little more curious and inquisitive for a good reason: to give us more useful, visual, interactive and fun answers than ever. Brilliant.
