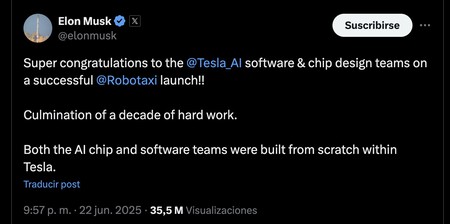

Elon Musk was beaming with pride on Sunday, and rightly so. His robotaxis finally began operating, and he celebrated with a message on X congratulating the team in charge of the launch. The reason is clear: those engineers have not only gotten Tesla’s robotaxis working, they have done so with a chip and software developed entirely in-house.

Tesla already has robotaxis. “It is good to have an automatic pilot in airplanes, we should have it in cars.” The quote is from Elon Musk, who in 2013 set out on his ambition to make Teslas drive themselves. Since then he has kept making promises he has not fulfilled, but in recent days he achieved a notable milestone: launching his robotaxi service … although, once again, it differed from what he had promised. These vehicles began operating last Sunday in Austin, Texas, albeit with strong restrictions and only in very specific areas of the city.

The long history of Autopilot. Those 2013 statements set Tesla on a remarkable path, and the company soon decided it wanted to go it alone rather than depend on anyone. Although it initially partnered with Mobileye to use its sensors and hardware, that changed in July 2016. It was then that Tesla began developing its own hardware for its autonomous driving systems.

The evolution of its “hardware”. At the end of 2016, the first in-house hardware platform (“Hardware 2” or HW2, successor to “Hardware 1”, which was based on Mobileye solutions) arrived in Tesla vehicles. A revision called HW2.5 followed in August 2017, but the most important version to date has been “Hardware 3” (HW3), launched in March 2019 with 14 nm chips and fitted to Tesla vehicles until early 2023. That is when “Hardware 4” (HW4) arrived, Tesla’s big bet for its current systems. The next iteration is expected to arrive in three or four years, and will also be based on chips manufactured by Samsung.

A very capable chip. In both HW3 and HW4, the chips have been manufactured by Samsung and are derived from that company’s Exynos line. HW3 used a 14 nm chip with 12 CPU cores, 2 neural network (NN) processors and 33 TOPS of AI compute power. With the new HW4 generation, the chip is built on a 7 nm process and has 20 CPU cores, 3 NN processors and 50 TOPS of compute power. The cameras also have better sensors (up from 1.2 MP to 5 MP), and the Tesla Vision system is more precise as well. By the way: this is not the only AI chip the company designs.

Autonomy level 2. That has not been enough to raise the autonomy level of its self-driving software, which for now appears to be Level 2, behind Mercedes or Ford. At present, FSD can control the car autonomously, but the driver must supervise the driving at all times and be ready to take control. A comparative analysis of various autonomous driving systems indicated in March 2024 that FSD had a “poor” rating at the time. In fact, most of the systems analyzed received that same rating, and only one (the Lexus system) was considered decent.

The software also matters (a lot). Alongside that hardware work is the software platform behind Full Self-Driving (FSD), Tesla’s autonomous driving system. It uses deep neural networks (DNNs) trained on data from the billions of kilometers driven by Tesla’s global fleet. A particularly notable step in its evolution was the jump from FSD V11 to FSD V12, which introduced the end-to-end architecture, in which vehicle controls are handled directly and as a whole by neural networks, instead of relying on rules explicitly programmed by developers or on independent modules for perception, planning and driving control. FSD iterations are constant and are delivered as OTA updates to Tesla customers, who can even try them, not without surprises, in their beta versions.
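To make the difference between the two approaches concrete, here is a minimal toy sketch in Python. It is not Tesla's code or architecture: the names `perceive`, `plan`, `modular_drive` and `make_end_to_end` are hypothetical, the "camera frame" is just a short list of brightness values, and the "learned" weights are set by hand rather than trained. It only illustrates the structural contrast between hand-written stages and a single function mapping pixels to controls.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Controls:
    steering: float  # -1.0 (full left) to 1.0 (full right)
    throttle: float  # 0.0 to 1.0


def clamp(value: float) -> float:
    """Keep a steering command inside the valid range."""
    return max(-1.0, min(1.0, value))


# Modular approach: separate stages, each written by a developer.
def perceive(pixels: List[float]) -> float:
    """Hypothetical perception stage: right-half brightness minus left-half."""
    half = len(pixels) // 2
    return sum(pixels[half:]) - sum(pixels[:half])


def plan(lane_offset: float) -> float:
    """Hypothetical hand-written rule: steer proportionally to the offset."""
    return clamp(0.5 * lane_offset)


def modular_drive(pixels: List[float]) -> Controls:
    return Controls(steering=plan(perceive(pixels)), throttle=0.3)


# End-to-end approach: one parameterized function from pixels to controls.
# In a real system the weights would come from training on driving data;
# here they are supplied by the caller (an assumption for illustration).
def make_end_to_end(weights: List[float], bias: float) -> Callable[[List[float]], Controls]:
    def drive(pixels: List[float]) -> Controls:
        raw = sum(w * p for w, p in zip(weights, pixels)) + bias
        return Controls(steering=clamp(raw), throttle=0.3)

    return drive


if __name__ == "__main__":
    frame = [1.0, 0.0, 0.0, 0.5]
    learned = make_end_to_end([-0.5, -0.5, 0.5, 0.5], 0.0)
    print(modular_drive(frame))
    print(learned(frame))
```

In the modular version, a developer can read and change each rule; in the end-to-end version, the behavior lives entirely in the weights, which is why the quality and volume of training data matter so much.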

The Dojo supercomputer. To round out the whole project, in 2021 Tesla announced the Tesla Dojo supercomputer, which by April 2024 already had 35,000 Nvidia H100 chips. Its purpose is to train Tesla’s machine learning models to improve FSD.

No lidar at all. Tesla decided from the start not to opt for lidar, which is, for example, the absolute core of Waymo’s systems. Google engineers already told Musk that “This is not done”, but he has always chosen not to use the technology despite its many advantages. What Tesla did use with HW1 and HW2 was a combined system of radar, cameras and ultrasonic sensors, but in 2021 it dropped radar to focus on 8 cameras and 12 ultrasonic sensors. That undoubtedly lowers hardware component costs, and to compensate for the absence of those sensors Tesla has an advantage: the data it collects from its FSD users, which it uses to train its neural networks. However, some independent analyses indicate that Tesla’s decision leads to avoidable accidents.

In Xataka | AI agents are promising. But as with Tesla’s FSD, you had better not take your hands off the steering wheel
