Alfa. Give answers that are not true. Invents, and also does it with a simply amazing ease. The answers seem coherent thanks to that apparent coherence and security, but the truth is that that can end up causing disturbing problems. For example, that you recommend you glue on pizza so that the cheese is well stuck.

Hallucinations are not a mystical error. In Openai they know the problem well, and they just Publish a report in which they analyze the causes of hallucinations. According to the study, these arise from “statistical pressures” in the training and evaluation stages.

Award for guessing. The problem, they explain, is that in these procedures it is being rewarded that the “guess” instead of admitting that there may be uncertainty in the answers, “as when a student faces a difficult question in an exam” and answers some of the available options To see if you are lucky and hire. In Openai they point out how AI does something similar in those cases, and in training it is encouraged to answer the answer instead of answering with a simple “I don’t know.”

Damn probabilities. In the pre-training phase the models learn the distribution of language from a large text corpus. And that is where the authors emphasize that although the data of origin are completely free of errors, the statistical techniques used They cause the model to make mistakes. The generation of a valid text is much more complex than answering a simple question with a yes or a not as “is this output valid?”

Predicting the word has trap. Language models learn to “speak” with preventive, in which they learn to predict the next word of a phrase thanks to the intake of huge amounts of text. There are no “true/false” labels in each sentence with which it is trained, only “positive (valid) examples of language. That makes it harder to avoid hallucinations, but in Openai they think they have a possible response that in fact they have already applied in GPT-5.

A new training. To mitigate the problem in Openai they propose to introduce a binary classification that they call “IS-ID-VALID” (IIV, “Is it valid?”), Which trains a model to distinguish between valid and erroneous responses.

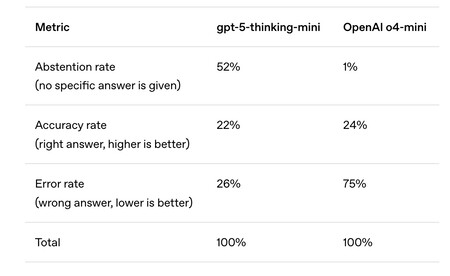

GPT-5 is somewhat more humble. When there is a correct answer, Openai models classify the answers that the model gives in three groups: correct, errors, and abstentions, which reflect some “humility.” According to its data, GPT-5 has improved in terms of hallucinations rate because in its tests much more abstain (52%) than O4-mini (1%), for example. Although O4-mini is slightly better in correct answers, It is much worse in error rate.

Benchmarks reward the successes. The study also indicates how The benchmarks and the technical cards of the current models (Model Cards) focus completely on the successes. In this way, although the models of AI effectively improve and succeed more and more, they continue to hallucinate and there are hardly any data on these hallucinations rates that should be replaced by a simple “I do not know.”

{“videoid”: “x8jpy2b”, “Autoplay”: fals, “title”: “What is behind it like chatgpt, dall-e or midjourney? | artificial intelligence”, “tag”: “Webedia-prod”, “Duration”: “1173”}

Easy solution. But as is the case in testing tests, there is a way to prevent Students play the quiniela: Penalize errors rather than uncertainty. In these exams, answering well can use a point but answering badly can subtract 0.5 points and not answer zero points. If you don’t know the answer, guessing can be very expensive. Well, with AI models, the same.

(Function () {Window._js_modules = Window._js_modules || {}; var headelement = document.getelegsbytagname (‘head’) (0); if (_js_modules.instagram) {var instagramscript = Document.Createlement (‘script’); }}) ();

It was originally posted in

Xataka

by

Javier Pastor

.

GIPHY App Key not set. Please check settings