The PC wants to become a somewhat different device from the one we knew. Microsoft has been aiming for this metamorphosis for some time, and now those responsible are telling us that its fundamental component will be AI and, more specifically, Copilot.

The integration of Copilot into PCs and Windows 11 has been relatively slow, but Microsoft believes it is time to take a significant leap. One that affects not only how we will interact with the PC, but how we will work with it. Or rather, how we won’t work (as much).

“Hey, Copilot”: the voice as a substitute (or complement) for the mouse and keyboard

The mouse and keyboard transformed our lives and allowed us to get the most out of our machines. For decades they have been the key tools for communicating with computers, but that is gradually beginning to change.

Microsoft knows this and has in fact been working for some time on a new paradigm in which the mouse and keyboard take a backseat. What comes to the fore instead is the voice, and although that transition will probably be slow and gradual, Microsoft is clear about it. According to the company, the PC must transform and be able to do three things:

- Let us interact with it naturally, by both text and voice, and understand us

- See what we see and offer guided support based on that information

- Perform actions and complete tasks on our behalf

To boost this interaction, Microsoft has launched an option that lets us start talking to our PC by saying the words “Hey, Copilot”. If that option is turned on in the Settings of the Copilot application, we can use the feature whenever we want. Windows 11 signals that it is listening with an on-screen microphone and a short chime.

That covers the first capability Microsoft talks about. For the second, the Redmond company also has a solution. It is called Copilot Vision, and an earlier version was presented a few months ago. Now Microsoft says that this option will be available “in all markets where Copilot is available,” and will allow Windows AI to access the desktop and the applications we are using.

With this option, Copilot Vision will see our screen as we see it and, in theory, will be able to help us with any questions. It is the same idea that OpenAI raised with Operator and that Anthropic has been proposing for some time with Computer Use.

To strengthen this kind of real-time assistance there are the so-called Highlights, which let us ask Copilot to “teach me how (do this in this app)”.

We can also give it access to Word, Excel or PowerPoint and have it help us analyze a presentation or improve a paragraph in the document we are working on. Copilot Vision has relied on voice interaction until now, but Microsoft will soon add the ability to interact with it in a chat window in case we prefer to use the keyboard and text.

Microsoft’s ambition to make Copilot the center of our experience with Windows 11 also shows in the “Ask Copilot” button on the taskbar. With this shortcut the company wants to turn the taskbar into a “dynamic hub” that lets us do more with less effort. The option is opt-in: we will have to turn it on ourselves in Windows Settings.

Copilot Actions: when the computer does everything for you

Microsoft is also targeting another of the most promising trends in this segment: the ability of an AI model to take control of your browser, and even your computer, to complete actions for you.

This type of feature is now more integrated than ever into Windows 11 with Copilot Actions, “an AI agent that completes tasks for you by interacting with your applications and files, using vision and advanced reasoning to click, write and scroll as a human would do,” Microsoft explains.

We already saw Copilot Actions in an earlier version (browser only) in April, but now they are making the leap to operating across all the apps on our PC. That means that, if we want, the AI stops being passive (it answers questions, and that’s it) and becomes a proactive assistant that can carry out tasks such as updating documents, organizing files, sending emails or booking a flight.

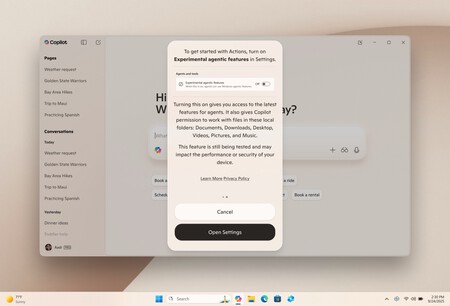

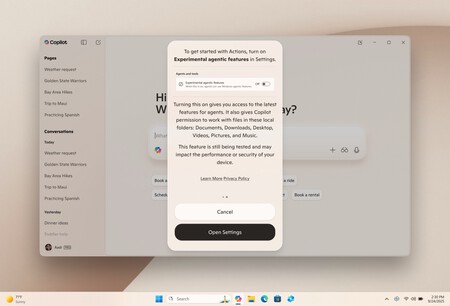

To use this option, the user must give the agent permission to access the data and applications on the PC, something that will understandably worry users who fear that the AI will make mistakes or leak sensitive data.

To reduce those risks, Microsoft applies several techniques. To start, it uses agent accounts that are separate from the account we use on our device.

Agents operate in a contained and protected workspace, which isolates and limits their access. They also start out with limited permissions and can only reach other resources, such as our files, when we explicitly allow it. In fact, in the preview version of Copilot Actions, the agent can only access very specific folders such as Documents, Downloads, Desktop or Pictures.
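To make the idea of limited starting permissions more concrete, here is a minimal Python sketch of a folder allowlist. It is only an illustration under stated assumptions, not Microsoft’s implementation: the `AgentWorkspace` class, its methods and the example paths are all hypothetical, and only the four folder names come from the preview described above.

```python
from pathlib import PureWindowsPath

# The four folders mentioned for the preview; everything else here is hypothetical.
DEFAULT_FOLDERS = ("Documents", "Downloads", "Desktop", "Pictures")


class AgentWorkspace:
    """Hypothetical model of an agent that starts with limited folder access."""

    def __init__(self, user_profile: str):
        self.profile = PureWindowsPath(user_profile)
        # The agent begins with access to the default folders only.
        self.allowed = {self.profile / name for name in DEFAULT_FOLDERS}

    def grant(self, folder: str) -> None:
        """Record an explicit user approval for one additional folder."""
        self.allowed.add(PureWindowsPath(folder))

    def can_access(self, path: str) -> bool:
        """Return True only if the path lies inside an allowed folder."""
        target = PureWindowsPath(path)
        # Pure paths are not resolved, so reject ".." rather than trust it.
        if ".." in target.parts:
            return False
        return any(
            target == root or root in target.parents for root in self.allowed
        )
```

In this sketch, a file under `C:\Users\ana\Documents` would pass the check, while one under `C:\Users\ana\Music` would be refused until the user calls `grant` for that folder.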

In addition, the agents must be “signed” by a trusted source, similar to what happens with apps distributed through application stores such as the Microsoft Store, Google Play or the App Store.

Microsoft’s ambition is clear, but there is a problem: for now, most of the Copilot options in Windows 11 are still unavailable in Spain and the rest of the European Union. We will have to keep waiting.
