OpenAI has announced new open-source models that anyone can download and install on their computer: gpt-oss. Now that they are out, it is an excellent opportunity to start experimenting with on-premises AI, that is to say, AI that runs on our own computer, so today we are going to show you how to install and use them.

Differences between the two models

Although their names are similar, gpt-oss-120b and gpt-oss-20b are neither the same model nor have the same requirements. The first, gpt-oss-120b, achieves near-parity with OpenAI o4-mini and requires at least 60 GB of graphics memory.

Having your own ChatGPT at home is easy, but it requires hardware that is up to the task | Image: Xataka

Its little brother, gpt-oss-20b, is somewhat less capable (similar to o3-mini, according to OpenAI), but it can run on edge devices. In other words, it can run on your own computer as long as it has at least 16 GB of memory, preferably graphics memory (VRAM).

In summary:

- gpt-oss-120b: large model; needs at least 60 GB of VRAM or unified memory and is not suitable for consumer computers.

- gpt-oss-20b: smaller model; needs 16 GB of VRAM or unified memory and is suitable for consumer computers.

The one we are going to use, for obvious reasons, is gpt-oss-20b.
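To make the rule of thumb above concrete, here is a minimal Python sketch that picks the larger gpt-oss model your memory can hold. It only encodes the minimum figures quoted in this article (60 GB and 16 GB) and uses Ollama's model tags as names; the `pick_model` helper is our own hypothetical illustration, and real memory use also depends on context length and on what else is running.

```python
from __future__ import annotations

# Minimum memory (VRAM or unified memory, in GB) quoted in this article.
MIN_MEMORY_GB = {
    "gpt-oss:120b": 60,
    "gpt-oss:20b": 16,
}


def pick_model(available_gb: float) -> str | None:
    """Return the largest gpt-oss model whose stated minimum fits, or None."""
    # Check the most demanding model first so we never settle for less than we can run.
    by_need = sorted(MIN_MEMORY_GB.items(), key=lambda item: item[1], reverse=True)
    for name, needed_gb in by_need:
        if available_gb >= needed_gb:
            return name
    return None


print(pick_model(24))  # gpt-oss:20b  (a 24 GB consumer GPU)
print(pick_model(80))  # gpt-oss:120b (a data-center card)
print(pick_model(8))   # None: not enough for either model
```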

Things to keep in mind

Running an AI model locally is an intensive process that can, and most likely will, slow your computer down considerably. Although you could run it with 16 GB of RAM, ideally your computer should have a high-end GPU.

What happens if your computer has less than 16 GB of VRAM? The tool will fall back on system RAM, which must then be 16 GB or more; otherwise, the model will not work properly. As a general recommendation, dedicate as many of your computer's resources as possible to running the model, so close everything that is not strictly necessary.
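The fallback described above can be summarized as a simple decision. The sketch below is a deliberately simplified, hypothetical helper (`where_it_runs` is not part of Ollama) that mirrors the article's rule of thumb; in practice Ollama can also split a model between GPU memory and system memory instead of choosing one or the other.

```python
# Memory the article says gpt-oss-20b needs, in GB.
REQUIRED_GB = 16


def where_it_runs(vram_gb: float, ram_gb: float, required_gb: float = REQUIRED_GB) -> str:
    """Simplified version of the article's rule of thumb (hypothetical helper).

    On machines with unified memory (e.g. Apple silicon), pass the same
    figure for both arguments.
    """
    if vram_gb >= required_gb:
        return "gpu"  # fits in graphics memory: the fastest option
    if ram_gb >= required_gb:
        return "system-ram"  # falls back to RAM: it works, but much slower
    return "insufficient"  # below the minimum either way: it will not work properly


print(where_it_runs(vram_gb=24, ram_gb=32))  # gpu
print(where_it_runs(vram_gb=8, ram_gb=32))   # system-ram
print(where_it_runs(vram_gb=8, ram_gb=8))    # insufficient
```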

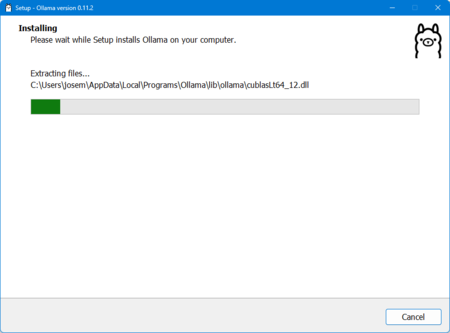

Install Ollama on your computer

Ollama installation | Image: Xataka

For this tutorial we will use a well-known application: Ollama. It is an open-source platform that greatly simplifies installing, accessing and using LLMs (large language models). Think of it as a model runner.

ChatGPT is an online platform through which we interact with a model, such as GPT-4o. Ollama is the same, but at home and with the models we have installed on our computer. It is free, open-source software available for Windows, Mac and Linux.
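Besides its chat window, Ollama runs a local server with an HTTP API. As a minimal sketch, assuming the default address `http://localhost:11434` and the `/api/tags` endpoint, this standard-library Python snippet lists the models currently installed on your computer. The helper names are our own illustration, not an official client.

```python
from __future__ import annotations

import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434"


def parse_model_names(payload: dict) -> list[str]:
    """Extract model names (e.g. "gpt-oss:20b") from an /api/tags response."""
    return [model["name"] for model in payload.get("models", [])]


def list_local_models(base_url: str = OLLAMA_URL) -> list[str]:
    """Ask the local Ollama server which models are installed."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=5) as resp:
        return parse_model_names(json.load(resp))
```

With Ollama open, calling `list_local_models()` should include `"gpt-oss:20b"` once that model has been downloaded.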

Download gpt-oss

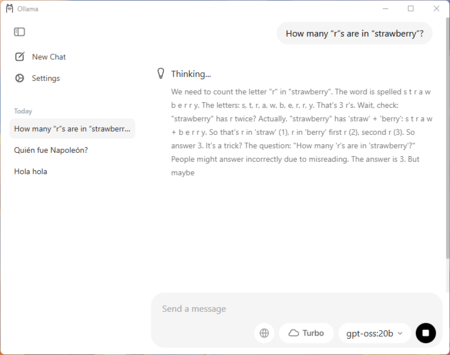

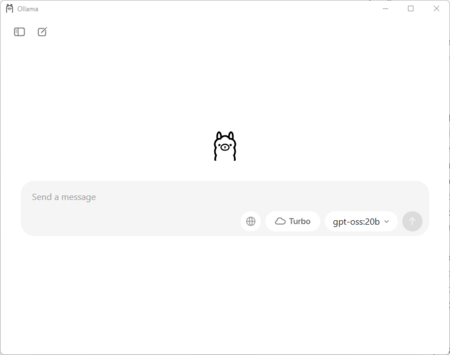

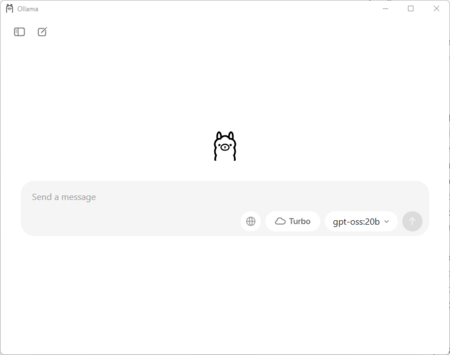

Once we have downloaded and installed the program, we will find an interface like this. If you wish, you can also use Ollama the old-fashioned way, through the command-line interface, but the graphical interface is much more pleasant.

Main interface of Ollama | Image: Xataka

If we look closely, we will see a drop-down menu in the lower-right corner with the name of the model we are using or, rather, the one we will use.

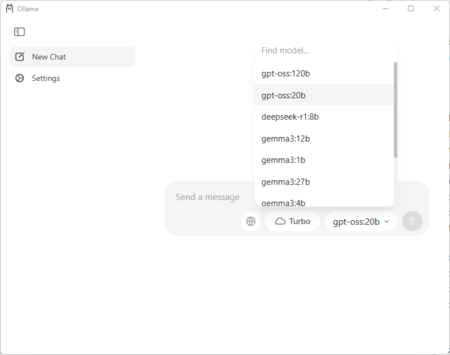

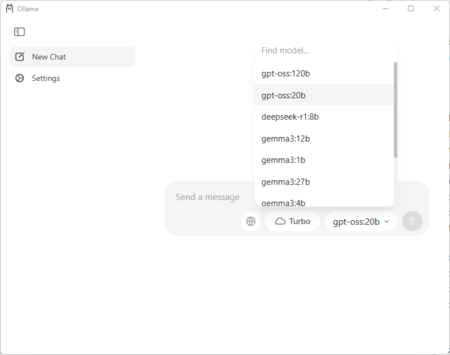

Accessing the different AI models from Ollama | Image: Xataka

If we click on the drop-down, we can access a good handful of models, such as DeepSeek R1, Gemma or Qwen. In this case, we want to select “gpt-oss:20b”.

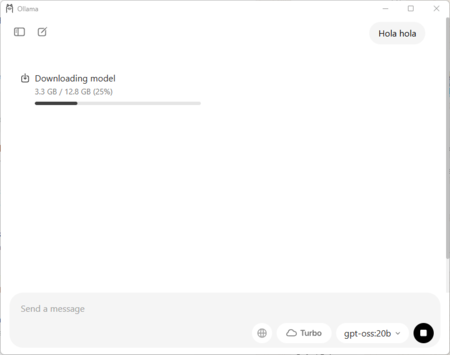

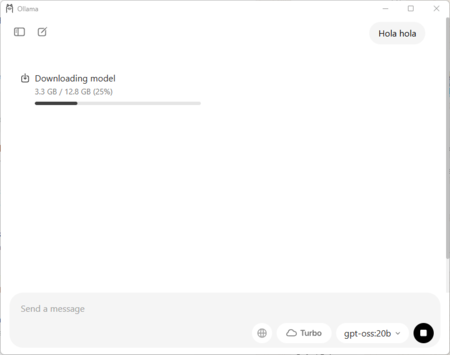

Downloading the model: arm yourself with patience | Image: Xataka

Once “gpt-oss:20b” is selected, simply sending a message in the chat will start downloading the model. Be patient, because it weighs 12.8 GB and can take a while.
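If you prefer to start the download from a script rather than from the chat, Ollama also exposes a `/api/pull` endpoint (the command-line equivalent is `ollama pull gpt-oss:20b`). The sketch below assumes the default local address, that recent versions accept the model name under the `model` key, and that the server streams one JSON object per line with a `status` and, while downloading, `total` and `completed` byte counts. `progress_percent` and `pull_model` are our own hypothetical helpers.

```python
from __future__ import annotations

import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434"


def progress_percent(event: dict) -> float | None:
    """Return download progress for one streamed event, or None if it has no byte counts."""
    total = event.get("total")
    completed = event.get("completed")
    if not total or completed is None:
        return None  # status-only events such as "pulling manifest" or "success"
    return 100.0 * completed / total


def pull_model(model: str = "gpt-oss:20b", base_url: str = OLLAMA_URL) -> None:
    """Download a model through the local Ollama server, printing progress."""
    request = urllib.request.Request(
        f"{base_url}/api/pull",
        data=json.dumps({"model": model}).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # No explicit timeout: a 12.8 GB download can legitimately take a long time.
    with urllib.request.urlopen(request) as resp:
        for raw_line in resp:  # the server streams one JSON object per line
            if not raw_line.strip():
                continue
            event = json.loads(raw_line)
            pct = progress_percent(event)
            status = event.get("status", "")
            print(status if pct is None else f"{status}: {pct:.1f}%")
```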

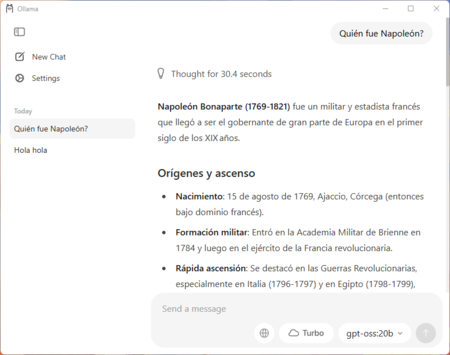

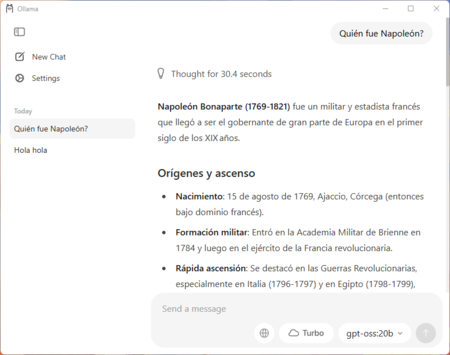

Talking to gpt-oss-20b through Ollama | Image: Xataka

Once it is installed, you can start talking to the AI as if it were ChatGPT. Of course, if your GPU does not meet the minimum requirements, you will find it much slower than ChatGPT. That is hardly surprising: you are running the model on your own computer, not in a huge data center packed with the latest dedicated NVIDIA GPUs.
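The same conversation can also be held from code. This minimal sketch assumes Ollama's default local address and its `/api/chat` endpoint with `stream` set to false, which returns a single JSON object whose `message.content` field holds the reply. `build_chat_request` and `chat` are illustrative names, not part of any official client.

```python
from __future__ import annotations

import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434"


def build_chat_request(prompt: str, model: str = "gpt-oss:20b",
                       base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Prepare a single-turn, non-streaming chat request for the local server."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # ask for one complete JSON reply instead of chunks
    }
    return urllib.request.Request(
        f"{base_url}/api/chat",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt: str, model: str = "gpt-oss:20b", timeout: float = 600) -> str:
    """Send one prompt and return the assistant's reply text."""
    request = build_chat_request(prompt, model)
    # Generous timeout: on modest hardware a local reply can take minutes.
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        reply = json.load(resp)
    return reply["message"]["content"]
```

For example, `chat("Explain in one sentence what a local LLM is.")` should return the model's answer as a string, provided Ollama is open and gpt-oss-20b has already been downloaded.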

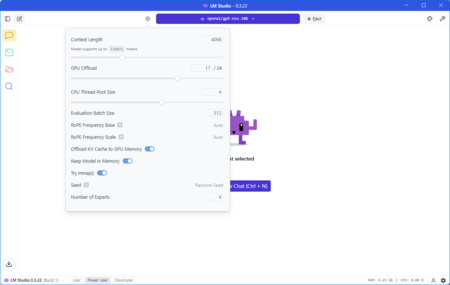

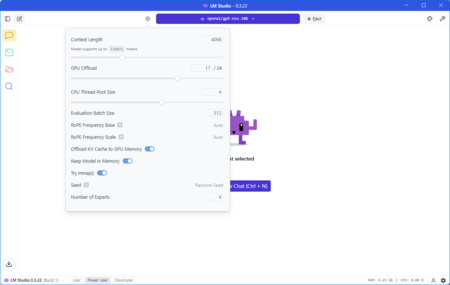

Another option: LM Studio

LM Studio | Image: Xataka

Ollama has the advantage of being intuitive, simple and straightforward. If we want more options, LM Studio is a much more complete program. It is available for Windows, Linux and Mac and, like Ollama, can manage several models, gpt-oss-20b among them.

It is a more advanced application that lets us fine-tune both how our computer behaves and how the model behaves, although getting the most out of it requires more advanced knowledge.

Cover image | Xataka

