Think for a moment about the artificial intelligence models you have used in recent days. It may have been through ChatGPT, Gemini either Claudeor perhaps through tools like Codex, Claude Code or AI Cursor. In practice, the choice is usually simple: we end up using what best fits what we need at any given moment, almost without stopping to think about the technology behind it.

However, that balance changes frequently. Each new model that appears promises improvements, new capabilities or different ways of working, and with it a fairly direct question returns: if it is worth trying, if it can really offer us something better or if what we already use is still enough. Claude Sonnet 4.6 just came to the foreand this is how it is positioned against the competition.

Claude Sonnet’s starting point 4.6. Here we find what Anthropic describes as a transversal improvement in capabilities, which includes advances in coding, computer use, long-context reasoning, agent planning, and tasks typical of intellectual and creative work. Added to this set is a context window of up to one million tokens in beta, designed to process entire code bases, extensive contracts or large collections of information without fragmentation.

Three levels, the same map. To understand where Sonnet 4.6 fits in, it’s worth looking at how Anthropic tends to organize its family of models into different levels with different objectives. Haiku prioritizes speed and efficiency, Opus is reserved for tasks that require the deepest reasoning, and Sonnet occupies the middle ground, designed as a balance between capacity and operating cost. Within this framework, the company maintains that the new Sonnet comes close in some real jobs to the performance previously associated with the Opus, an ambitious claim.

When AI starts using the computer. One of the improvements that Anthropic highlights most strongly in Sonnet 4.6 is its progress in what it calls computer usethat is, the ability of the model to interact with software in a way similar to a person, without depending on APIs designed specifically for automation. This progress is supported by references such as OSWorld-Verified, a testing environment with real applications where the Sonnet family has been improving steadily over several months. The company also recognizes limits and risks that we have talked about before, such as attempts at manipulation through prompt injection.

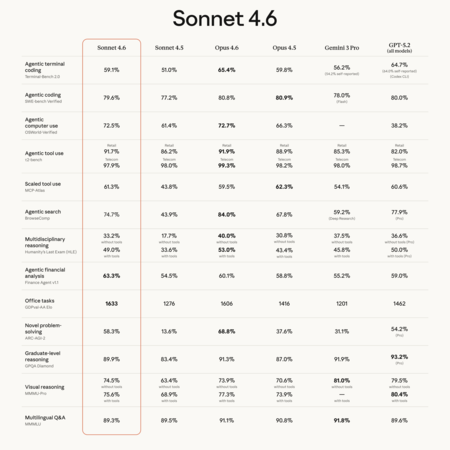

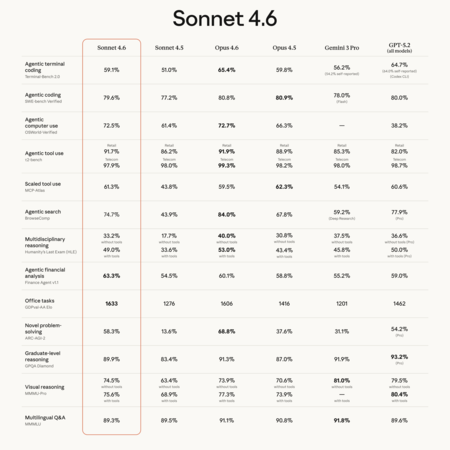

Searching for the ‘best’ model. At this point, the relevant question stops being how much Sonnet 4.6 has improved in absolute terms and begins to focus on how it is compared to the other large models that today compete for the same space of use. The comparison is not simple nor does it allow for a single winner, because each system excels in different areas and responds to different technical priorities. That is why it is advisable to read the benchmarks with a practical perspective, identifying in which specific tasks the real differences appear.

Where each model stands out. The direct comparison with GPT-5.2 draws a distribution of strengths rather than a clear victory. According to the table published by Anthropic, Sonnet 4.6 stands out especially widely in the autonomous use of the computer measured in OSWorld-Verified, in addition to showing an advantage in office tasks (GDPval-AA Elo) and in some analysis or problem solving scenarios (Finance Agent v1.1, ARC-AGI-2). GPT-5.2, for its part, maintains better results in graduate-level reasoning (GPQA Diamond), visual comprehension (MMMU-Pro) and terminal programming (Terminal-Bench 2.0), with nuances such as results marked as Pro in some tests (BrowseComp, HLE) or self-reported grades in Terminal-Bench 2.0.

The comparison with Gemini 3 Pro introduces a different nuance, because here the advantages are concentrated above all in the field of reasoning and general knowledge. The Google model obtains better results in graduate-level reasoning tests (GPQA Diamond) and in wide-ranging multilingual questionnaires (MMMLU), in addition to being ahead in visual reasoning without tools (MMMU-Pro). Sonnet 4.6, on the other hand, retains a certain advantage when external tools or scenarios closer to the applied work come into play. The absence of some comparable data in the table itself forces, in any case, to interpret this duel with caution.

Where Sonnet 4.6 can be used. The new model is available in all Claude plans, including the free level, where it also becomes the default option within claude.ai and Claude Cowork. It can also be used through Claude Code, the API and the main cloud platforms, maintaining the same price as the Sonnet 4.5 version.

After going through capabilities, limits and comparisons, the real decision returns to the user’s daily life. Sonnet 4.6 aims to be especially useful in productive tasks, direct interaction with software and long workflows, while GPT-5.2 and Gemini 3 Pro maintain advantages in academic reasoning, visual comprehension or general knowledge depending on the test considered. No one dominates all fronts, and that fragmentation defines the current moment of artificial intelligence.

Images | Anthropic

In Xataka | In 2025, AI seemed to have hit a wall of progress. A volatilized wall in February 2026

GIPHY App Key not set. Please check settings