Imagine a missile guided by a pigeon. It sounds absurd, but it happened in the middle of World War II: someone proposed training pigeons to peck at the target on a screen and thereby steer the projectile. The system was never used, but it left behind something more powerful than the anecdote: a way of learning based on trial, error and reward. The comparison helps explain the logic, but it is not literal. There are no birds in today's algorithms; what remains is the idea of reinforcing behaviors through signals. That simple, direct logic is the one many artificial intelligence models follow. What was once a response conditioned by food is now a score, a preference or a human signal that the model learns to pursue.

The trial-and-reinforcement mechanism did not fade over time. In the 1940s and 1950s, the American psychologist Burrhus Frederic Skinner formalized the idea with his theory of “operant conditioning”: a behavior becomes more likely to be repeated if its consequences are positive. Although behaviorism was displaced by approaches focused on mental processes, its logic found new ground in computer science. From the late 1970s and, above all, in the 1980s and 1990s, Richard Sutton and Andrew Barto applied it to the design of artificial agents capable of acting, receiving a signal and adjusting their behavior, work they gathered in the book ‘Reinforcement Learning: An Introduction’.

As MIT Technology Review points out, the idea of shaping behavior without resorting to fixed rules became a useful tool for teaching machines. From the 1980s onward, reinforcement learning began to be implemented in algorithms that explore simulated environments, fail, receive feedback and try again. They do not follow human instructions step by step; they learn from the outcome. The approach proved especially effective in tasks with clear objectives, such as games. And it was there that it made one of its most visible leaps.
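The act, fail, get feedback, try again loop can be sketched with tabular Q-learning, a classic algorithm covered in Sutton and Barto's book. This is a minimal illustration, not something the article describes: the corridor environment, the reward of 1 at the goal and the hyperparameters (`alpha`, `gamma`, `epsilon`) are all hypothetical choices made for the example.

```python
import random

# Hypothetical toy environment (not from the article): a corridor of cells 0..N_STATES-1.
# The agent starts in cell 0; reaching the last cell gives reward 1 and ends the episode.
N_STATES = 6
ACTIONS = (-1, +1)  # move left, move right


def step(state, action):
    """Apply an action; return (next_state, reward, done). Walls clamp the position."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    if next_state == N_STATES - 1:
        return next_state, 1.0, True
    return next_state, 0.0, False


def q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    """Tabular Q-learning: learn action values purely from reward feedback."""
    rng = random.Random(seed)
    # q[s][i] estimates the discounted future reward of taking ACTIONS[i] in state s.
    q = [[0.0] * len(ACTIONS) for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))  # explore: try something at random
            else:
                best = max(q[state])
                # exploit: pick a best-known action, breaking ties at random
                a = rng.choice([i for i, v in enumerate(q[state]) if v == best])
            next_state, reward, done = step(state, ACTIONS[a])
            # Feedback: move the estimate toward reward + discounted best future value.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q


def greedy_policy(q):
    """Best-known action in every non-terminal cell."""
    return [ACTIONS[max(range(len(ACTIONS)), key=lambda i: q[s][i])]
            for s in range(N_STATES - 1)]


if __name__ == "__main__":
    print(greedy_policy(q_learning()))
```

No rule ever tells the agent to go right; it only sees a reward when it reaches the last cell. After enough episodes, the greedy policy should move right from every cell, purely as a result of that feedback.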

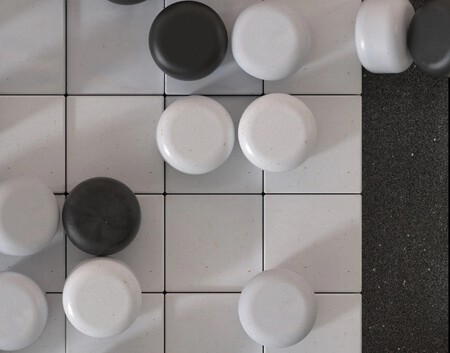

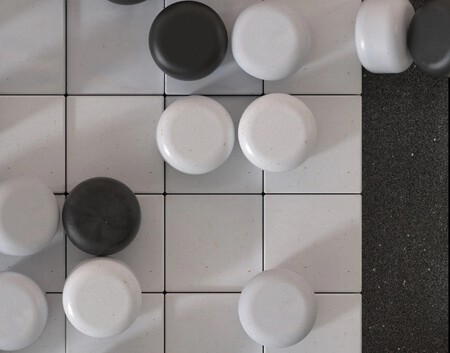

AlphaGo’s story marked a turning point in artificial intelligence. In March 2016, it beat South Korean player Lee Sedol 4-1 in a series of Go games. It succeeded by combining supervised learning from human games with reinforcement learning. A year later, DeepMind went one step further with AlphaGo Zero. Instead of training on human data, it started from scratch and learned by playing against itself: each victory reinforced its strategy, and each defeat corrected it. In 40 days it surpassed not only the human champion but also every previous version of AlphaGo.

Today, reinforcement learning is not only used in games; it is also used to refine the models behind services such as ChatGPT. OpenAI’s system incorporates a technique known as reinforcement learning from human feedback (RLHF): people compare the model’s responses, and those preferences become a signal that guides its training. According to OpenAI, this phase aims to align the model’s behavior with the user’s intent. The model does not learn explicit rules but patterns that maximize the reward, that is, whatever receives better ratings.
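How do human comparisons turn into a numeric reward? A common approach is to train a reward model with a pairwise logistic (Bradley-Terry style) loss, so that the preferred response in each pair scores higher. The sketch below is a minimal, hypothetical illustration, not OpenAI's implementation: each response is reduced to a made-up two-number feature vector and the reward model is a simple linear function.

```python
import math

# Hypothetical setup (not OpenAI's implementation): each response is summarized by a
# small feature vector, and the reward model is linear, reward = w . features.


def reward(w, features):
    """Score one response with the linear reward model."""
    return sum(wi * xi for wi, xi in zip(w, features))


def train_reward_model(pairs, dim, lr=0.5, epochs=200):
    """Fit w from human comparisons with a pairwise logistic (Bradley-Terry style) loss.

    pairs: list of (preferred_features, rejected_features).
    Loss per pair: -log(sigmoid(reward(preferred) - reward(rejected))).
    """
    w = [0.0] * dim
    for _ in range(epochs):
        for preferred, rejected in pairs:
            margin = reward(w, preferred) - reward(w, rejected)
            # d(loss)/d(margin) = -sigmoid(-margin); step against the gradient.
            scale = 1.0 / (1.0 + math.exp(margin))  # sigmoid(-margin)
            for i in range(dim):
                w[i] += lr * scale * (preferred[i] - rejected[i])
    return w


# Hypothetical features per response: [answers_the_question, rudeness]
comparisons = [
    ([0.9, 0.1], [0.2, 0.1]),  # raters preferred the more on-topic answer
    ([0.7, 0.0], [0.7, 0.8]),  # same content, the polite version won
    ([0.6, 0.2], [0.3, 0.9]),
]

if __name__ == "__main__":
    w = train_reward_model(comparisons, dim=2)
    print("learned weights:", w)
    for preferred, rejected in comparisons:
        print(reward(w, preferred) > reward(w, rejected))
```

The learned weights end up rewarding on-topic answers and penalizing rudeness without anyone writing that rule down. In a full RLHF pipeline, a score like this would then serve as the reward for a separate reinforcement-learning stage that updates the language model itself, which this sketch leaves out.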

Reinforcement works, but it doesn’t work for everything. Its effectiveness depends on the signal being well defined and accurately representing the objective. If the signal is confusing or poorly designed, the system can adopt ineffective or even problematic strategies. This has fueled a scientific debate. Some biologists have pointed out a paradox: associative learning is considered limited when animals do it, yet it is celebrated in AI when it produces advanced results. It is no accident that big tech companies have adopted this approach. More than 80 years after that experiment with pigeons, their pecks are still present in the technology we use every day.

Images | Nist Museum | Google | Xataka with Gemini 2.5 Pro
