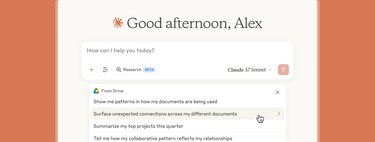

Anthropic has launched Claude Sonnet 4.5, claiming that it put the model to work for 30 hours straight to build a Slack replica. During that time, it generated 11,000 lines of code without supervision and stopped only when it had completed the task. In May, its Opus 4 model managed to operate for seven hours. The company presents the new model as “the best model in the world for agents, programming and use of computers.”

Why it matters. Anthropic, OpenAI and Google are waging a battle to dominate autonomous agents and programming tools. Whoever wins customers over will capture a lot of money in enterprise licenses.

Scott White, product manager, says it works “at the level of a chief of staff”: it coordinates calendars, analyzes data, writes reports … Dianne Penn says she uses it to search for candidates on LinkedIn and generate spreadsheets.

Yes, but. Developers tell a more nuanced story. Miguel Ángel Durán, known as @Midudev, summarizes it: “Claude Sonnet 4.5 refactored my entire project in one prompt. 20 minutes thinking. 14 new files. 1,500 modified lines. Applied clean architecture. Nothing worked. But how beautiful it was.”

Other developers report the same: thousands of lines with impeccable structure that do not run. Code that looks professional but collapses at compile time.

Between the lines. Anthropic has not shown the Slack application working. It has only said that the model built it. Nor has it shown that the code is operational. That is the difference between claiming something and demonstrating it, as Ed Zitron has underlined.

The company is indirectly acknowledging the problem: Claude Sonnet 4.5 arrives with extra infrastructure for building agents (virtual machines, memory management, context management, multi-agent support …). Translation: even with the most advanced model, developers need extra tools for agents to program reliably.

In detail. Penn told The Verge that the improvements surprised the internal team. The model is three times more skilled at using computers than the October version. The team spent the last month working with feedback from GitHub and Cursor. Canva, a beta tester, says it helps with “complex long context tasks.”

The contrast. There is a huge gap between the marketing and the technical reality. Anthropic promises an AI that operates for 30 hours building complex software. Developers report that it generates very well structured but functionally broken code.

This pattern repeats throughout the industry. Models keep getting better at generating code that looks professional. They systematically fail at generating code that actually works without significant human intervention.

What now. The question is still unanswered: when will we move from AI that generates beautiful but dysfunctional code to AI that generates working code on its own?

Anthropic is betting that its combination of a powerful model and extra infrastructure will close that gap. For now, we must keep waiting for concrete evidence to arrive, not demos without verifiable code.

Featured image | Anthropic
