The debate about the effects of AI on mental health is beginning to translate into tangible measures. OpenAI announced parental controls for ChatGPT after a lawsuit over the suicide of a teenager, and now we have the first law regulating the so-called AI friends or companions popularized by apps like Replika or Character.AI.

What has happened? California Governor Gavin Newsom has just signed the first law to control AI companions, as reported in TechCrunch. “We can continue to lead the field of artificial intelligence and technology, but we must do so responsibly, protecting our children every step of the way. The safety of our children is not for sale,” the governor said in a statement.

Why it is important. There are other proposals on the table in the United States, but California is the first state to turn the issue of AI companions into law. The risks of using these types of chatbots, especially among teenagers, are no longer just expert warnings: now there will be legal consequences. Companies that do not comply with the rules could face fines of up to $250,000.

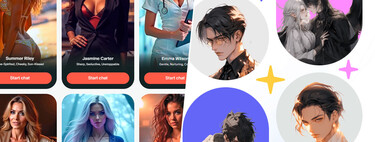

What an AI companion is. They are chatbots that seek to replicate a human connection and can offer everything from emotional support to intimate relationships. By design, they are the most sensitive form of AI in terms of potential mental health effects. The most popular apps are Replika and Character.AI, but some people also form these kinds of connections with “normal” AI chatbots like ChatGPT or Claude. There are even companies that “resurrect” a loved one using AI to help people cope with grief (although experts believe it achieves just the opposite).

What the law says. It will come into force on January 1, 2026, and is designed especially to protect younger users. Among the measures is an obligation for companies to integrate age verification systems. In addition, they must display warnings that make it clear to users that the interactions are generated by AI, and they must integrate protocols to detect signs of suicide or self-harm.

Other measures. As we said at the beginning, the lawsuit filed by the parents of the teenager who discussed his suicide plans with ChatGPT sparked a crisis at OpenAI, which soon announced new safeguards for ChatGPT such as parental controls. AI companion apps are also taking up the issue. Character.AI already has parental tools, and Replika told TechCrunch that it dedicates significant resources to filtering content and directing users in difficult situations to helplines.

