AMD is doing things well, but even so it still cannot compete with NVIDIA. The company has just presented its renewed roadmap with promising products, but that guarantees nothing against an NVIDIA that has no intention of letting its absolute leadership slip away.

AMD's problem is not a lack of presence, but getting the market to take notice. Data from the consultancy IDC indicate that NVIDIA dominates the AI chip market with an 85.2% market share, versus 14.3% for AMD. Other analysts, such as Jon Peddie Research, go further: according to their data, NVIDIA's share of this segment is 92%.

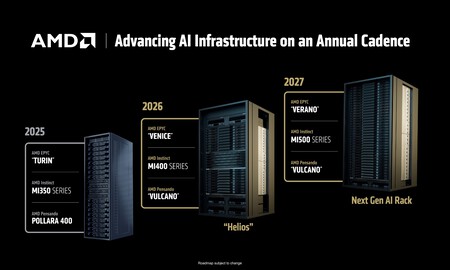

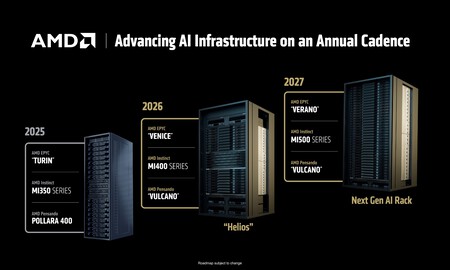

AMD Instinct MI350 is just the beginning. AMD's AI GPUs, which the company calls "accelerators", continue to evolve. At the event AMD presented its Instinct MI350 series, with two variants: MI350X and MI355X. According to the manufacturer, these chips deliver four times the overall performance of the previous generation and are up to 35 times more powerful at AI inference (that is, the practical use of models such as ChatGPT, which "infer" their responses from our prompts). They have 288 GB of HBM3E memory and 8 TB/s of memory bandwidth. Their performance reaches 18.45 PFLOPS at FP4 precision and 9.2 PFLOPS at FP8 precision.

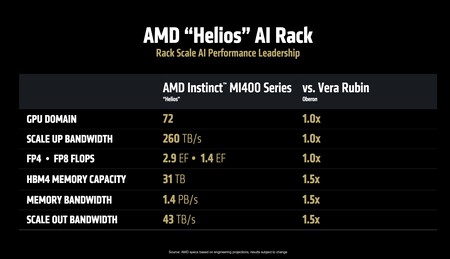

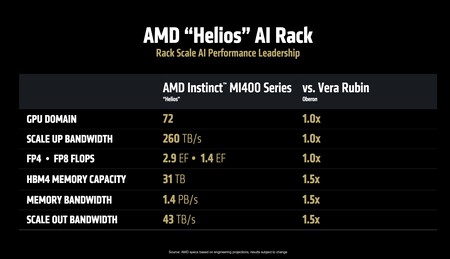

Instinct MI400 in 2026. Next year AMD's new family of accelerators will arrive: the future Instinct MI400, with up to 432 GB of HBM4 memory, 19.6 TB/s of memory bandwidth, and 40 PFLOPS at FP4 precision and 20 PFLOPS at FP8 precision. These monsters will be sold in future "Helios" racks, which can house up to 72 MI400 GPUs with up to 260 TB/s of total bandwidth thanks to the Ultra Accelerator Link interconnect.
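As a rough sanity check, AMD's per-GPU MI400 figures can be multiplied by the 72 GPUs a Helios rack holds to get rack-level peaks. This is a minimal sketch that assumes perfect linear scaling (real workloads never achieve this); the function name `rack_totals` is hypothetical, and memory is converted to terabytes in decimal units.

```python
# Per-GPU Instinct MI400 figures as quoted by AMD.
MI400_FP4_PFLOPS = 40.0
MI400_FP8_PFLOPS = 20.0
MI400_HBM4_GB = 432
GPUS_PER_HELIOS_RACK = 72  # "up to 72" MI400 per Helios rack


def rack_totals(gpus: int) -> dict:
    """Naive rack totals: each per-GPU figure times the GPU count.

    Assumption: perfect linear scaling, no interconnect overhead.
    """
    return {
        "fp4_exaflops": gpus * MI400_FP4_PFLOPS / 1000,  # 1 EF = 1000 PF
        "fp8_exaflops": gpus * MI400_FP8_PFLOPS / 1000,
        "hbm4_tb": gpus * MI400_HBM4_GB / 1000,          # decimal TB
    }


if __name__ == "__main__":
    for name, value in rack_totals(GPUS_PER_HELIOS_RACK).items():
        print(f"{name}: {value:.2f}")
```

Under that assumption the FP8 total comes out at 1.44 exaflops, consistent with the roughly 1.4 exaflops quoted for these racks.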

EPYC Venice. AMD did not only talk about GPUs: its future processors for data-center servers are also in full development. EPYC Venice will arrive in 2026 and will be based on the Zen 6 architecture. Among the variants is an especially spectacular one with 256 cores that will offer up to 70% more performance than the previous generation. These processors will be paired with the future Instinct MI400. They are expected to be manufactured on TSMC's N2P (2 nm) node.

Helios versus Oberon. The aforementioned Helios rack will not compete with NVIDIA's current AI server, the GB200 NVL72, which connects 36 Grace CPUs and 72 Blackwell GPUs. Instead, it is meant to compete with its successor, code-named Oberon, which will use AI GPUs based on the Vera Rubin architecture. The performance figures of these future racks are absolutely dizzying: their FP8 compute, for example, reaches 1.4 exaflops.

The same in some areas, better in others. AMD promises to match NVIDIA in several areas, but also claims it will clearly surpass it (by 50%) in memory capacity and bandwidth, something crucial for AI training and inference. Caution is warranted, though: for the end of 2027 NVIDIA is preparing the Rubin Ultra architecture, which promises racks with up to 5 exaflops at FP8 precision, more than three times the figure for Helios or Oberon.
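The size of that gap follows directly from the two FP8 figures given for these racks. A minimal sketch, assuming both numbers are comparable peak values; the helper name `speedup` is hypothetical:

```python
# Rack-level FP8 figures quoted in the text, in exaflops.
HELIOS_FP8_EF = 1.4       # Helios (and Oberon, per the text)
RUBIN_ULTRA_FP8_EF = 5.0  # NVIDIA Rubin Ultra racks, end of 2027


def speedup(new: float, baseline: float) -> float:
    """Return how many times larger `new` is than `baseline`."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return new / baseline


if __name__ == "__main__":
    ratio = speedup(RUBIN_ULTRA_FP8_EF, HELIOS_FP8_EF)
    print(f"Rubin Ultra vs Helios (FP8): {ratio:.2f}x")
```

The ratio is about 3.57, so on paper Rubin Ultra would be roughly three and a half times faster at FP8 than the racks it succeeds.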

In 2027 there will be another "Verano". AMD's roadmap goes further: it has already begun developing its next generation of EPYC server chips, code-named Verano ("summer" in Spanish), which will replace EPYC Venice. These CPUs will be paired with the future Instinct MI500X, and it is expected, though not certain, that both chips will use TSMC's already announced A16 node (1.6 nm), which will enter production at the end of 2026. No specifications have been given for these products yet, most likely because they will depend on the manufacturing node AMD ends up using.

Frantic race. All these announcements show that AMD does not want to fall behind in the race to place its products in data centers worldwide. Crusoe, a company that builds large AI data centers, announced a few days ago that it would spend $400 million on AMD chips, and even Sam Altman, CEO of OpenAI, made a surprise appearance during the keynote given by Lisa Su, AMD's CEO. Altman said OpenAI will also use AMD chips in the data centers it relies on, and remarked that the new AMD AI GPUs "will be something amazing."

AMD claims to be more efficient (and cheaper). AMD's message at the event was clear: its MI355 chips are much more efficient and cheaper than NVIDIA's B200 and GB200 at comparable performance. The selling prices of these GPUs are not known, but we do know that in early 2024 AMD's MI300X cost at most $15,000, compared with more than $40,000 for NVIDIA's H100.

The biggest challenge is still CUDA. The performance of AMD's AI chips is not really the company's problem. Detailed studies revealed months ago that the MI300X is clearly superior to NVIDIA's H100 and H200 in performance and power. However, NVIDIA has CUDA, the de facto industry standard for developing AI services and applications. Using AMD's native software is feasible, yes, but the experience leaves much to be desired. As SemiAnalysis put it, "the software is full of bugs that make training (AI models) with AMD impossible."

AMD's hope is ROCm. At the same event AMD also presented ROCm 7, the latest version of its own open-source programming platform for its GPUs. AMD said this version is 3.5 times more powerful than ROCm 6, and even claims that it outperforms the B200 running CUDA by 30% when serving the DeepSeek R1 model. Even so, another SemiAnalysis report indicates that ROCm still lags behind in some areas. Making this software layer let developers exploit the full potential of AMD's chips is precisely the key to the future of these efforts. Even more than on hardware, AMD must now push hard on software.

Image | AMD

In Xataka | AMD's CEO is in China with one goal: to snatch the AI market from NVIDIA
