We usually talk to artificial intelligence as if it were one more person, and sometimes we entrust it with very personal information. However, we rarely stop to think about what happens to those conversations. Until now, the standard across much of the sector had been to use them to train models unless the user objected. Anthropic was an exception: Claude had an explicit policy of not using its individual customers' conversations for this purpose. That exception has just been broken. The reason is direct and forceful: data is the raw material of AI.

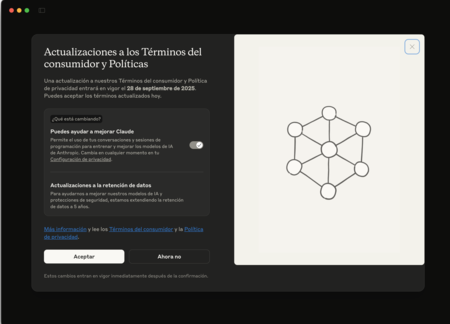

Anthropic has just announced on its official blog an update to its consumer terms of service and its privacy policy. Users of the Free, Pro and Max plans, including Claude Code sessions, must explicitly accept or reject the use of their conversations to train future models. The company set a deadline of September 28, 2025 and warned that, after that date, users will have to choose a preference to continue using Claude.

The Anthropic turn. The modification does not affect everyone equally: services subject to commercial terms are left out, such as Claude for Work, Claude Gov, Claude for Education, or API access through third parties such as Amazon Bedrock or Google Cloud's Vertex AI. Anthropic states that the new setting will only apply to chats and coding sessions started or resumed after accepting the terms, and that old conversations with no additional activity will not be used to train models. It is a relevant operational distinction: the change applies to future activity.

Why this change? Anthropic points out that all language models “train using large amounts of data” and that real interactions offer valuable signals for improving capabilities such as reasoning or code correction. At the same time, several specialists have been pointing to a structural problem: the open web is running out as a fresh and easily accessible source of information, so companies are looking for new data sources to sustain the continuous improvement of their models. In that context, user conversations acquire strategic value.

Although Anthropic emphasizes security (improving Claude and reinforcing safeguards against harmful uses, such as scams and abuse), the decision probably also responds to competition: OpenAI and Google remain the benchmarks in the field, and they too require large volumes of interaction data to advance. Without enough data, the gaps in the AI race we are witnessing live can widen.

Five years instead of thirty days. Alongside the training permission, Anthropic has extended the retention period for data shared for improvement purposes: five years if the user agrees to participate, compared to the 30 days that apply if that option is not activated. The company also specifies that deleted chats will not be included in future training and that submitted feedback may also be retained. It also states that it combines automated processes and tools to filter or obfuscate sensitive information, and that it does not sell user data to third parties.

Images | Claude | Screen capture
