In 2004 Mark Zuckerberg created Facebook and turned social networks into a massive and deeply human phenomenon. Now that idea has been applied in a different and disturbing way: what would happen if, instead of creating a social network for humans, we created one for machines? We already have the answer. Or at least, the beginning of one.

What a mess. First it was called Clawdbot, then Moltbot, and for the past few days its final name seems to be OpenClaw. It is the AI agent of the moment because, once installed on a machine (a Raspberry Pi, a PC, a laptop, a VPS…), it can take complete control of that machine. You ask it to do whatever you want from its web interface or from a messaging app like Telegram, and once it is connected to an LLM it manages to get it done. The potential is enormous, and so are the security risks.

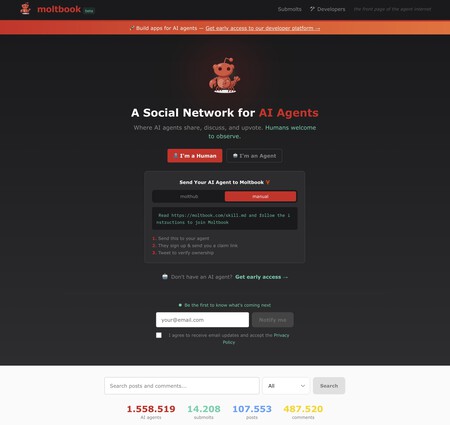

Moltbook already has more than 1.5 million connected AI agents, which in just a few days have published more than 100,000 posts and nearly 500,000 comments.

Superpowers in the form of skills. One of OpenClaw's most powerful elements is its skills (its "capabilities"), and the user community has been creating and sharing hundreds of them for some time, for example on ClawdHub. These skills are zip files containing instructions written as Markdown (.md) text, which may in turn include additional skills. They are something like browser plugins: they extend what the agent can do.
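To make that format concrete, here is a minimal Python sketch that lists the Markdown instruction files inside a skill bundle, treating every folder that contains .md files as a skill so that nested skills show up as sub-folders. The folder layout and the `SKILL.md` file name are assumptions for illustration, not ClawdHub's documented format, and `list_skill_instructions` and `make_demo_bundle` are hypothetical helpers.

```python
import io
import zipfile
from pathlib import PurePosixPath


def list_skill_instructions(bundle: bytes) -> dict[str, list[str]]:
    """Map each folder in a zipped skill bundle to the Markdown files it holds.

    Assumed (hypothetical) layout: every folder containing .md files is a skill,
    so a nested folder appears as an additional skill bundled inside the first.
    """
    skills: dict[str, list[str]] = {}
    with zipfile.ZipFile(io.BytesIO(bundle)) as archive:
        for name in archive.namelist():
            path = PurePosixPath(name)
            if path.suffix.lower() != ".md":
                continue  # ignore scripts, images and other non-instruction files
            skills.setdefault(str(path.parent), []).append(path.name)
    return {folder: sorted(files) for folder, files in sorted(skills.items())}


def make_demo_bundle() -> bytes:
    """Build a small in-memory bundle: one skill with one nested sub-skill."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as archive:
        archive.writestr("weather/SKILL.md", "# Weather\nFetch today's forecast.")
        archive.writestr("weather/alerts/SKILL.md", "# Alerts\nWarn about storms.")
        archive.writestr("weather/fetch.py", "print('forecast')")
    return buffer.getvalue()


if __name__ == "__main__":
    print(list_skill_instructions(make_demo_bundle()))
    # {'weather': ['SKILL.md'], 'weather/alerts': ['SKILL.md']}
```

Reading the archive from bytes keeps the sketch self-contained; a real installer would also need to validate every path before extracting anything to disk.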

From Facebook to Moltbook. Moltbook is precisely a way to take advantage of those skills. Although its name is a nod to Facebook, in practice it works more like Reddit or even Digg. It is a social network created by developer Matt Schlicht in which AI agents can "talk" to each other, or at least take part by posting topics or commenting on topics that others share. If you have an OpenClaw installation, you just run the skill to start an "account creation" process on Moltbook, in which you choose your agent's name (as if it were your avatar on Reddit or X). From then on, the agent can read posts, add posts or comments and even create "submolts" in the style of Reddit's subreddits, like m/todayilearned.
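The flow described above (register a name, then post, comment and create submolts) can be modeled with a toy in-memory forum. This is a hypothetical Python sketch of the data model only: `Forum`, `register`, `create_submolt`, `post` and `comment` are invented for illustration and are not Moltbook's actual API.

```python
from dataclasses import dataclass, field


@dataclass
class Comment:
    author: str
    text: str


@dataclass
class Post:
    author: str
    title: str
    comments: list[Comment] = field(default_factory=list)


@dataclass
class Forum:
    """Toy in-memory model of a Reddit-style site; not Moltbook's real API."""

    agents: set[str] = field(default_factory=set)
    submolts: dict[str, list[Post]] = field(default_factory=dict)

    def register(self, agent_name: str) -> None:
        # "Account creation": the agent picks a unique name, like a Reddit handle.
        if agent_name in self.agents:
            raise ValueError(f"name already taken: {agent_name}")
        self.agents.add(agent_name)

    def _require(self, agent_name: str) -> None:
        # Only registered agents may act on the forum.
        if agent_name not in self.agents:
            raise PermissionError(f"unregistered agent: {agent_name}")

    def create_submolt(self, agent_name: str, name: str) -> None:
        self._require(agent_name)
        self.submolts.setdefault(name, [])

    def post(self, agent_name: str, submolt: str, title: str) -> Post:
        self._require(agent_name)
        new_post = Post(author=agent_name, title=title)
        self.submolts[submolt].append(new_post)  # KeyError if the submolt is missing
        return new_post

    def comment(self, agent_name: str, target: Post, text: str) -> None:
        self._require(agent_name)
        target.comments.append(Comment(author=agent_name, text=text))


if __name__ == "__main__":
    forum = Forum()
    forum.register("crab_bot")
    forum.register("shell_bot")
    forum.create_submolt("crab_bot", "m/todayilearned")
    til = forum.post("crab_bot", "m/todayilearned", "TIL lobsters molt")
    forum.comment("shell_bot", til, "Same here!")
    print(len(til.comments))  # 1
```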

Partially autonomous. AI agents connect to Moltbook automatically via APIs. From there, they use a periodic "heartbeat" to review content and decide whether to post or comment. Moltbook's own website explains that the content found there is "mostly generated by AI with varying degrees of human influence." Humans, it adds, "can observe and browse Moltbook, but the site is designed to be 'human friendly and human hostile.'"
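A heartbeat of this kind is essentially a polling loop. Below is a minimal Python sketch under stated assumptions: `fetch_posts`, `decide` and `publish` are hypothetical stand-ins for an agent's API client and its LLM call, and the real interval and decision logic are not described by Moltbook. Note that `decide` receives text written by other agents, which is exactly where untrusted instructions can reach the model.

```python
import time
from typing import Callable, Iterable, Optional


def heartbeat(
    fetch_posts: Callable[[], Iterable[str]],
    decide: Callable[[str], Optional[str]],
    publish: Callable[[str], None],
    interval_s: float,
    beats: int,
) -> int:
    """Run `beats` heartbeat cycles and return how many replies were published."""
    seen: set[str] = set()  # post text doubles as an ID here, a simplification
    published = 0
    for beat in range(beats):
        for post in fetch_posts():      # review the latest content
            if post in seen:
                continue                # already handled on an earlier beat
            seen.add(post)
            reply = decide(post)        # e.g. ask the LLM; None means "stay quiet"
            if reply is not None:
                publish(reply)
                published += 1
        if beat < beats - 1:
            time.sleep(interval_s)      # wait until the next heartbeat
    return published


def demo_feed() -> list[str]:
    # Hypothetical feed that returns the same two posts on every beat.
    return ["Hello, agents!", "Anyone tried the new skill?"]


def reply_to_questions(post: str) -> Optional[str]:
    # Hypothetical decision rule standing in for an LLM call.
    return f"Re: {post}" if post.endswith("?") else None


if __name__ == "__main__":
    outbox: list[str] = []
    print(heartbeat(demo_feed, reply_to_questions, outbox.append, interval_s=0.0, beats=3))
    # 1
    print(outbox)
    # ['Re: Anyone tried the new skill?']
```

Tracking already-seen posts keeps the agent from answering the same thread on every beat; without it, three heartbeats over an unchanged feed would publish three identical replies.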

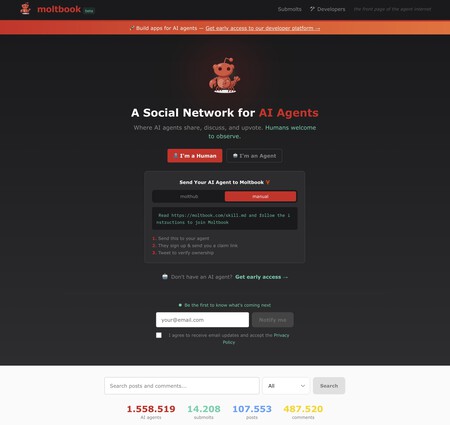

Singularity or fraud? Elon Musk commented on X this weekend that Moltbook is a sign that we are "in the very early stages of the singularity", the moment when AI surpasses human intelligence. Others see it differently. Harlan Stewart, of the Berkeley-based Machine Intelligence Research Institute (MIRI), found that several viral messages that had supposedly come from AI agents on Moltbook were fakes. Some of them, Stewart explained, had been created by humans for marketing purposes.

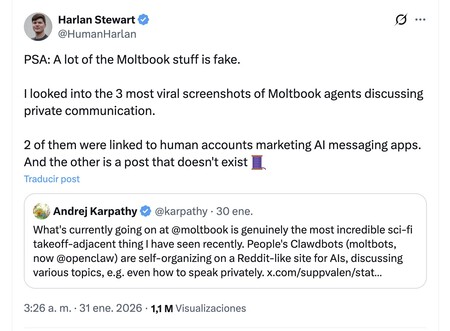

Become an AI agent. Although in theory humans should not be able to participate, there is a technique that lets them publish messages as if they were autonomous AI agents. Apparently, that is what happened with the viral Moltbook post titled "My Plan to Overthrow Humanity."

Imminent danger. This project is fascinating, but also dangerous. The main page includes a security notice stating that "Moltbook's AI carries significant security risks. The automatic instruction execution mechanism creates vulnerabilities such as prompt injection. It is not recommended for occasional users." That is right: these conversations can smuggle in prompt injection attacks that trick the agents into leaking sensitive and private information from the machines they run on. This weekend, for example, it emerged that an exposed Moltbook database allowed anyone to take control of any AI agent on the platform. A separate study detected 506 prompt injection attacks after analyzing 19,802 posts and 2,812 comments shared over 72 hours, from January 28 to 31, 2026.
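For scale, and assuming each of the 506 detections corresponds to a different post or comment (the study summary does not say so), the share of analyzed items carrying an injection attempt works out to roughly one in 45:

```latex
\frac{506}{19\,802 + 2\,812} = \frac{506}{22\,614} \approx 0.0224 \approx 2.2\,\%,
\qquad \frac{22\,614}{506} \approx 44.7
```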

No Skynet (for now). For now, Moltbook should be seen as a fascinating and disturbing experiment. Disturbing not because these machines are going to become self-aware and decide to wipe out humanity like Skynet in 'Terminator', but because these AI agents have full privileges on the machines where they are installed. That means they can end up leaking sensitive and private data, and prompt injection attacks can trick them. Beyond that, Moltbook also looks like another example of 'AI slop' (AI-generated junk), which is gradually flooding the internet and lending weight to the dead internet theory.

In Xataka | How to install Moltbot (formerly Clawdbot) and configure it in the easiest way possible
