Apple held its WWDC 2025, an edition in which the iOS redesign took center stage, even above its artificial intelligence features. At least, as far as the keynote was concerned. Liquid Glass is the new interface shared across all of Apple's operating systems, built on the design language we first saw in Vision Pro.

iOS 26, the iPhone's operating system, is now available as a developer beta, and I have been testing it since yesterday. I'm going to tell you what I found in this first beta, one with a lot of work ahead of it that lays the foundations for a new era for Apple. An era of curves.

Liquid Glass in iOS 26

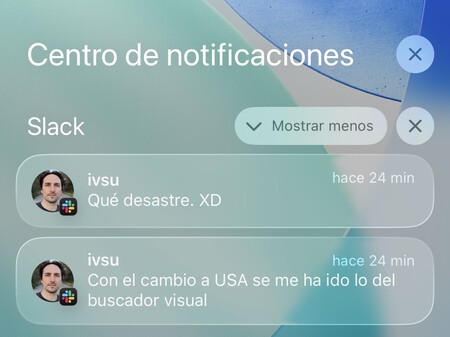

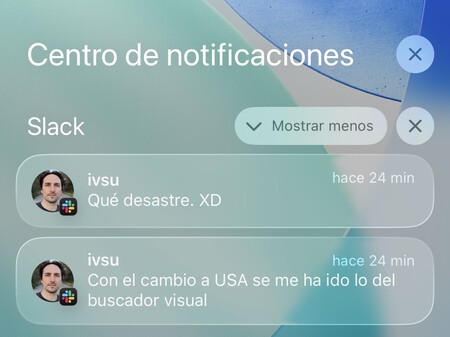

Lock screen notifications are practically illegible, for the moment.

iOS 26 completely changes the design we had in iOS 18, and absolutely every element of the interface now relies on transparency. Whether it looks better or worse I won't get into; that is subjective. What leaves no doubt is that there is a serious contrast problem.

It is a first version, but Apple has a challenge ahead with transparency: readability

With a light wallpaper (the default one), the elements of the lock screen and notification center have serious readability problems. It doesn't matter whether the panel is larger or smaller: it can barely be seen as soon as the notification lands on a lighter part of the wallpaper.

If it falls on a darker area, visibility improves (somewhat).

It doesn't help that UI elements have transparent edges, nor that absolutely everything is transparent. This is the first developer beta, so caution is in order. The first dark mode implementation in iOS was simply terrible, and now it works perfectly. What we are seeing right now is the draft Apple is going to work from, and these readability problems should, at least on paper, be resolved.

I am especially concerned about the glow on the edges of some apps. This shine is intentional, but it ends up making apps with white backgrounds look quite blurry and poorly defined. Apple will have to strike a balance between respecting this new visual identity and keeping everything readable in any circumstance.

The redesign affects all native apps, which now have a dynamic bar at the bottom. Perhaps too dynamic: the Camera app seems completely broken when you open it, and it is up to you to figure out that swiping over the photo icon reveals the rest of the options. Very un-Apple, even for a first beta.

The system is full of details, such as the remaining charging time in Settings (it does not appear on the lock screen, as it does on Android).

On the positive side, there are very polished elements: obsessively refined animations, lock screen improvements such as the clock automatically adapting to the main subject of the wallpaper and, although there is still work to do, a cohesion between native apps and the system that was never this deep before.

Even so, the animations are half-baked, there are broken elements in the interface, and it is clear that everything is still quite green. It doesn't worry me too much: this is the first developer beta of the biggest design change in the history of the iPhone.

An AI that still trails its rivals

Don't expect big changes in Apple Intelligence, because there aren't any. Screen recognition through ChatGPT doesn't work yet, the new Genmoji generation features in Image Playground are insignificant, and automatic translation doesn't work for now; it is frozen.

I have, however, been able to try the new call filter, which competes with Google's and is much more aggressive. Apple screens absolutely all unknown calls: Siri puts them on hold, asks who is calling and what they want, and then shows us the answer. Only then do we decide whether to pick up. It is a solution that radically ends spam, but it uses a sledgehammer to crack a nut.

There is none of Google's intelligent detection, just an aggressive filter that intercepts every call from a number we don't know. If it's urgent, or the person calling is in a particular hurry, we may end up missing the call in the process.

The key to Apple's AI is running locally, with its own model that doesn't need a constant cloud connection. Apple will open these Apple Intelligence tools to third parties so that developers can use them in their apps, always running on local models.

Its second big point is discretion. Apple wants AI to be there to help us day to day, not to systematically change things across the system. It is an unobtrusive implementation, though an insufficient one.

Nothing Apple has presented is new or exclusive: Google and Samsung have been leading the AI race in phones for more than two years. Live call translation, call filters (although less aggressive ones), and features Apple has no equivalent for, such as Audio Eraser, the navigation assistant, or screen sharing with Gemini Live (the assistant sees the screen in real time and interacts by voice, not only text) on models such as the Samsung Galaxy S25, show that Apple is still far from the throne.

Apple stays on the defensive

Apple has opted for a strategy in which its own AI handles everyday tasks and the advanced work is outsourced to companies such as OpenAI. The changes that will at some point arrive in Siri look irrelevant at this stage. We already know Apple's position on AI, and it makes some sense.

The problem is that it is not an AI built to win. And it is worrying that Apple seems to accept that this race is no longer its own. The local approach is reasonable, but not enough.

As for the design change, my position on this first beta is caution. If there is one thing Apple cares about above all, it is how polished its interfaces are, and migrating all its platforms to a new design language is a process that will take time and involve both mistakes and successes.

This is a beta I don't recommend installing, both because of the readability problems in the interface and because of its performance. On an iPhone 16 Pro, the phone runs hot, lags, and works very, very slowly. Waiting for upcoming versions, especially the public beta due in just a month, is a better idea.

Be that as it may, what we have in front of us is Apple's future for the coming years. The company describes it as the biggest visual change since iOS 7, released in 2013. visionOS has set the pace and visual identity of the entire Apple ecosystem. The question is whether Apple needs someone else's help to set a new pace in AI, or whether this approach is the one that will stay with us over the next few years.

Image | Xataka
