In 2019, a young researcher named François Chollet had the idea of creating a benchmark for AI. The idea was strange, to say the least, because in 2019 there was practically nothing to test with that benchmark. Chollet was in fact anticipating the future: ChatGPT would not appear, and the AI fever would not begin, for another three years.

More and more synthetic benchmarks would later arrive to measure the performance of AI models, but ARC-AGI was different. While many other benchmarks reward a model's capacity to memorize, ARC-AGI tests its capacity for abstract reasoning and generalization.

The problems in ARC-AGI and its successor, ARC-AGI 2, consist largely of visual puzzles that are relatively easy for humans to solve but that until recently were almost impossible for machines. Over the last two years, however, we have watched AI models improve at abstract understanding and generalization, gradually solving more and more ARC-AGI puzzles. The problem?

They spent a fortune to do it.

And that’s where GPT-5.2 comes in.

AI can solve almost anything. The question is: how much does it cost?

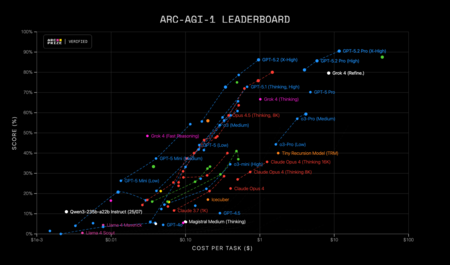

Last year, o3-preview managed to solve 87% of ARC-AGI 1. The milestone was so striking that the benchmark's own creators published an announcement about it. To achieve it, however, o3-preview ran 100 tasks at a total cost of $456,000, or $4,560 per task.

Source: ARC-AGI Prize

GPT-5.2, the latest version of OpenAI's foundation model, was released yesterday. Its results on other benchmarks were exceptional, but what really stands out is its performance on ARC-AGI 1. Not because GPT-5.2 Pro (X-High) solved 90.5% of the problems, but because of how little each task cost.

The figure: $11.65 per task, roughly 390 times less than a year ago.

In fact, an even cheaper version, GPT-5.2 (X-High), achieved 86.2% at a cost of just $0.96 per task. Mind-boggling.
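To make the cost comparison concrete, here is a minimal Python sketch that recomputes the ratios from the per-task figures quoted above. It is plain division under one stated assumption: that the published costs are directly comparable. It does not adjust for the different accuracy levels (87% for o3-preview versus 90.5% and 86.2% for the two GPT-5.2 configurations). The variable names and the `cost_ratio` helper are illustrative only and are not part of any ARC Prize tooling.

```python
# Figures as quoted in the article, in US dollars (ARC-AGI 1).
# Assumption: these costs are directly comparable; accuracy is NOT factored in.
O3_PREVIEW_TOTAL = 456_000   # total cost reported for o3-preview
O3_PREVIEW_TASKS = 100       # number of tasks it ran
GPT52_PRO_XHIGH = 11.65      # GPT-5.2 Pro (X-High), cost per task
GPT52_XHIGH = 0.96           # GPT-5.2 (X-High), cost per task

# Per-task cost for o3-preview: total cost divided by number of tasks.
o3_per_task = O3_PREVIEW_TOTAL / O3_PREVIEW_TASKS


def cost_ratio(old: float, new: float) -> float:
    """Return how many times cheaper `new` is than `old` (hypothetical helper)."""
    return old / new


print(f"o3-preview per task: ${o3_per_task:,.2f}")
print(f"GPT-5.2 Pro (X-High): {cost_ratio(o3_per_task, GPT52_PRO_XHIGH):.0f}x cheaper")
print(f"GPT-5.2 (X-High): {cost_ratio(o3_per_task, GPT52_XHIGH):.0f}x cheaper")
```

Dividing $4,560 by $11.65 gives about 391, which matches the "roughly 390 times" figure; the same division for the $0.96 configuration gives 4,750.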

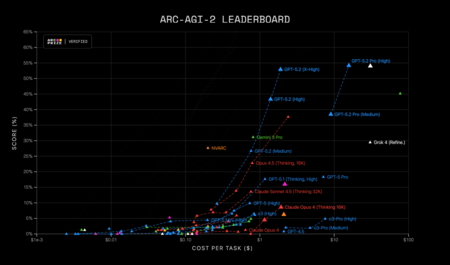

ARC-AGI 2 remains a challenge for most models, but GPT-5.2 has made another exceptional leap. Source: ARC-AGI Prize.

Chollet and his team knew that AI would pass their ARC-AGI test sooner or later, so in March 2025 they published ARC-AGI 2, the second version of their benchmark, to make it even harder for machines. This test is still a real challenge for most models, which until now had solved at most 38% of the problems (Claude Opus 4.5).

GPT-5.2 has managed to solve almost 55%. It's a colossal leap.

And once again at a truly astonishing cost: $15.72 per task. The trend is clear: AI is not only getting better, it is also getting cheaper and cheaper.

That's good news for everyone, because it offsets the increasingly widespread perception that scaling no longer pays off as much as it used to. The jumps in performance are no longer so striking (although these ARC-AGI results undercut that argument), but the drops in cost are.

The AI race seems to have reached a turning point. The real question is no longer whether AI will solve a problem, but how much it will cost to solve it. And GPT-5.2 seems to demonstrate something crucial: AI is solving more and more problems at an ever lower price.

This is also critical for OpenAI, which is in a delicate financial position. Now that we seem closer to a plateau in performance gains, becoming cheaper and more efficient is key to the company's future. And GPT-5.2, besides being a response to Gemini 3 Pro, appears to be a clear step in that direction.

In Xataka | There is a race in which Anthropic is winning over OpenAI: that of being profitable
