In the race to lead the development of artificial intelligence, something unusual has just happened. Gemini 3 Flash, Google’s new model, has surpassed GPT-5.2 Extra High, the maximum-reasoning configuration of OpenAI’s model, in several benchmarks. That forces us to rethink some of the rules we took for granted.

A fast model that also reasons. Google’s new model comes with a very specific promise: to demonstrate that “speed and scalability do not have to come at the expense of intelligence.” Although it has been designed with efficiency in mind, both in cost and speed, Google insists that Gemini 3 Flash also excels at reasoning tasks.

According to the company, the model can adjust how much it thinks. It can “think” for longer when the use case requires it, but on typical traffic it uses 30% fewer tokens on average than Gemini 2.5 Pro to complete a wide variety of tasks with high precision and without penalizing response times.

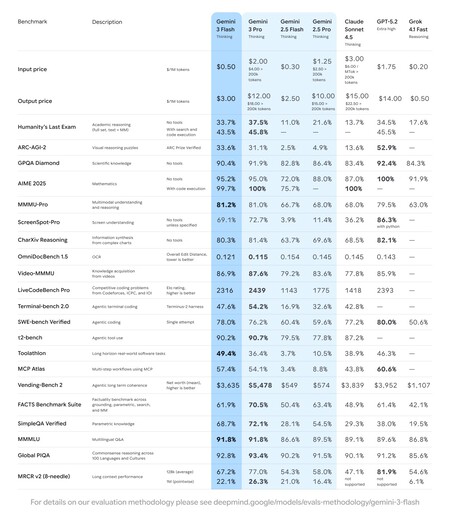

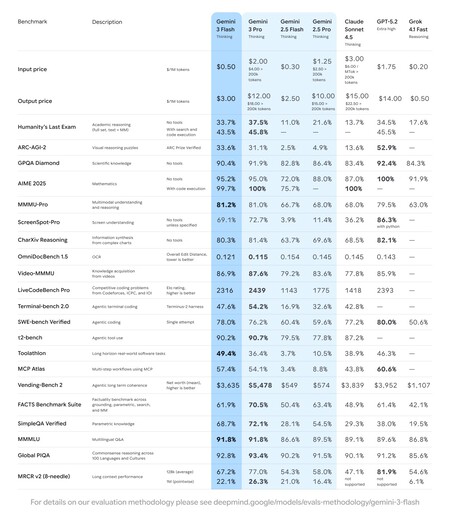

The truth is in the benchmarks. Are benchmarks perfect? No. But they are still one of the most useful tools we have for comparing AI models against each other and detecting the scenarios in which they perform better or worse. And on this front, Gemini 3 Flash comes out well.

In SimpleQA Verified, a test that measures reliability on knowledge questions, Gemini 3 Flash achieves 68.7% compared to 38.0% for GPT-5.2 Extra High. In multimodal reasoning, on MMMU-Pro, Google’s model scores 81.2% compared to OpenAI’s 79.5%. On Video-MMMU, Flash achieves 86.9% compared to 85.9% for GPT-5.2 Extra High.

In multilingual and cultural capabilities, Flash is again ahead, with 91.8% compared to 89.6% for GPT-5.2 Extra High. On Global PIQA, which focuses on common sense across 100 languages, the gap holds: 92.8% for Flash versus 91.2% for the OpenAI model. Everything suggests that Gemini 3 Flash is especially well optimized to capture nuances outside of English and to reason fluently in global contexts.

It also excels at tool use and agentic tasks. On Toolathlon, Flash scores 49.4% compared to GPT-5.2 Extra High’s 46.3%. On the FACTS Benchmark Suite the gap is tighter, but still in Google’s favor: 61.9% versus 61.4%. In long-running tool-execution tasks, Flash appears to be more consistent.

But it is not the king of pure reasoning. It is worth looking at the full picture. Although Gemini 3 Flash outperforms OpenAI’s best model in several tests, the balance shifts when it comes to “pure” reasoning. In the most demanding tests in this area, GPT-5.2 Extra High still sets the bar.

OpenAI’s model leads ARC-AGI-2, which focuses on visual puzzles, with 52.9% compared to Flash’s 33.6%. On AIME 2025, with code execution, it reaches 100% compared to 99.7%. And on SWE-bench Verified, aimed at software engineering, it scores 80.0% compared to 78.0% for Gemini 3 Flash.

What exactly is GPT-5.2 Extra High. The name GPT-5.2 Extra High appears several times throughout this article, and it is natural to wonder whether it is something new or little known. In fact, it is not a name that is usually presented to the general public.

Google uses this designation in its comparison table to refer to the maximum level of reasoning available in the OpenAI API for GPT-5.2 Thinking and Pro. In the official OpenAI documentation it is identified as “xhigh”.

Where you can use Gemini 3 Flash. Access to Gemini 3 Flash does not depend on your country. If you have access to the Gemini app, you are already using this model, which has become the default option. It is also reaching developers through the API, AI Studio and Vertex AI. In the United States, the rollout goes a step further: Gemini 3 Flash has become the default model for AI Mode in Google Search.

The price of using Gemini 3 Flash. For those who want to integrate Gemini 3 Flash into their applications, the model costs $0.50 per million input tokens and $3 per million output tokens. This is a slight increase over Gemini 2.5 Flash, which cost $0.30 per million input tokens and $2.50 per million output tokens.
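To put the price difference in concrete terms, here is a minimal Python sketch that estimates the bill for a workload at each model’s list price. The `Pricing` class, the `estimate_cost` function and the 10M-input/2M-output workload are hypothetical illustrations, not part of any Google SDK, and the calculation uses only the per-token prices quoted above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Hypothetical container for list prices in USD per million tokens."""
    input_per_million: float
    output_per_million: float


# List prices as quoted in the article.
GEMINI_3_FLASH = Pricing(input_per_million=0.50, output_per_million=3.00)
GEMINI_25_FLASH = Pricing(input_per_million=0.30, output_per_million=2.50)


def estimate_cost(p: Pricing, input_tokens: int, output_tokens: int) -> float:
    """Return the estimated cost in USD: tokens times price, scaled per million."""
    return (input_tokens * p.input_per_million
            + output_tokens * p.output_per_million) / 1_000_000


if __name__ == "__main__":
    # Hypothetical workload: 10M input tokens and 2M output tokens.
    new = estimate_cost(GEMINI_3_FLASH, 10_000_000, 2_000_000)
    old = estimate_cost(GEMINI_25_FLASH, 10_000_000, 2_000_000)
    print(f"Gemini 3 Flash:   ${new:.2f}")
    print(f"Gemini 2.5 Flash: ${old:.2f}")
```

For that hypothetical workload the sketch gives $11.00 for Gemini 3 Flash versus $8.00 for Gemini 2.5 Flash, so an increase that looks small per token adds up at scale.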

An increasingly tight race. Gone are the days when Google tried to take on ChatGPT with Bard, or when OpenAI seemed to be years ahead of the rest. Today, the gaps between the big players in AI have narrowed drastically. The competition is more direct, more technical and, above all, much closer.

Images | Google
