Every time ChatGPT generates a word, it costs money. But the price of each generated word has not stopped falling since the model launched, and the same goes for its rivals. Today we have AI models that are not only more powerful but also cheaper than ever, and the funny thing is that we are paying more and more to use them. What is happening?

Tokens. OpenAI defines tokens as “common sequences of characters found in a set of text.” That basic unit of information is what these models use to understand what we are saying and then process the text to produce an answer. Every time we use ChatGPT there is, on one hand, the request with the text we enter (input tokens) and, on the other, the text generated by the chatbot (output tokens).

| Price per million tokens (dollars) | Input | Output |
|---|---|---|
| GPT-5 | 1.10 | 10 |
| GPT-4o | 2.5 | 10 |
| o1 | 15 | 60 |
| Gemini 2.5 Pro (<200k tokens) | 1.25 | 10 |
| Gemini 2.5 Pro (>200k tokens) | 2.5 | 15 |
| Claude Opus 4.1 | 15 | 75 |
| Claude Sonnet 4 (<200k tokens) | 3 | 15 |
| Claude Sonnet 4 (>200k tokens) | 6 | 22.5 |

Price per million tokens. When we use an AI model, its cost is measured by how much every million input tokens and every million output tokens cost. The more powerful a model is, the higher the price of those tokens; to give an idea, the table above shows the prices of some current models. Output tokens (those generated by the machine) are notably more expensive than input tokens: it costs much more to generate text than to “receive and understand” it.
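To make the arithmetic concrete, here is a minimal sketch of how the cost of a single request could be estimated from these prices. The figures are copied from the table above; the function name `request_cost` and the token counts are only illustrative assumptions, not part of any provider's API.

```python
# Prices in dollars per million tokens (input, output), copied from the table above.
PRICES = {
    "GPT-5": (1.10, 10.0),
    "GPT-4o": (2.5, 10.0),
    "Claude Opus 4.1": (15.0, 75.0),
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimate the cost in dollars of one request (hypothetical helper)."""
    input_price, output_price = PRICES[model]
    total = input_tokens * input_price + output_tokens * output_price
    return total / 1_000_000


# Illustrative request: 1,000 input tokens and 500 output tokens.
for model in PRICES:
    print(f"{model}: ${request_cost(model, 1_000, 500):.4f}")
```

Even in this small example the 500 output tokens weigh more on the bill than the 1,000 input tokens, because each one is priced several times higher.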

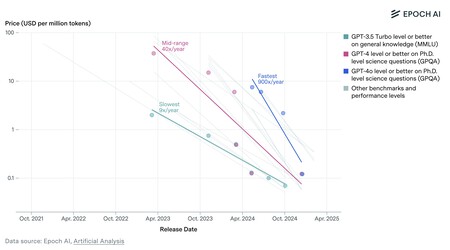

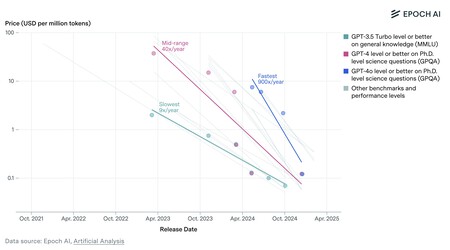

AI models are getting better and getting cheaper. At least, in terms of cost per million tokens. Source: Epoch AI.

But prices have not stopped falling. Those prices per million input or output tokens have nonetheless dropped considerably since ChatGPT (at the time based on GPT-3.5) appeared on the scene. An Epoch AI study from March 2025 showed how the price of inference (generating text, as ChatGPT, Gemini, or Claude do) has kept falling. In some cases the models are smaller and more efficient, and the hardware is now more cost-effective too, which contributes to that price drop.

And yet we pay more and more to use AI. Developers who use these AI models for programming are finding that their bills keep growing. These professionals are the ones who have made the most of this technology, but in doing so they have run into that contradiction. The explanation is actually simple.

Reasoning burns a lot of tokens. The problem is that reasoning models consume many tokens. This technology improves the accuracy of the answers, but to achieve that the models keep “thinking”: they generate different hypotheses, analyze them, and keep the solution they consider most likely or best. Models that “do not think” and generate text “only once” consume few tokens, but those that “reason” multiply that cost considerably.
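A rough sketch of that multiplication follows. It assumes that the hidden “thinking” tokens are billed at the output rate, and the token counts (500 visible, 4,000 of reasoning) are invented purely for illustration; real figures vary widely by model and task.

```python
def output_cost(visible_tokens: int, reasoning_tokens: int,
                price_per_million: float) -> float:
    """Cost in dollars of the output side of one answer.

    Assumption: reasoning tokens are billed like ordinary output tokens.
    """
    billed_tokens = visible_tokens + reasoning_tokens
    return billed_tokens * price_per_million / 1_000_000


PRICE = 10.0  # dollars per million output tokens, as for several models in the table

plain = output_cost(visible_tokens=500, reasoning_tokens=0, price_per_million=PRICE)
reasoned = output_cost(visible_tokens=500, reasoning_tokens=4_000, price_per_million=PRICE)

print(f"Without reasoning: ${plain:.4f}")
print(f"With reasoning:    ${reasoned:.4f}")
print(f"Multiplier:        {reasoned / plain:.1f}x")
```

Under these assumed numbers the user reads the same 500 tokens in both cases, but the second answer costs about nine times more, which is how a falling price per token can coexist with a rising bill.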

Vibe coding gets expensive. The clearest example of those high AI costs is found on “vibe coding” platforms. They make it possible to program with almost no programming knowledge, but these tools make extensive use of AI models, and token consumption (especially of output tokens, which are the most expensive) skyrockets. Several companies in this segment, such as Windsurf or Cursor, have realized how difficult it is to make money with AI, and various users are also warning about those soaring costs, on Reddit for example.

And AI agents promise to be very expensive. AI agents are expected to be able to do many things for us, but these systems will be expensive because they too will consume many tokens to understand, “reason,” and reach the desired solution.

Solution: use AIs that do not think so much. Faced with AIs that “reason” and run up high costs, the alternative is clear: avoid reasoning models and opt instead for models that “do not reason” to reduce costs. These models are much cheaper to use and can be useful in many scenarios. Fortunately, efficient and increasingly cheap reasoning models are also appearing: DeepSeek R1 is good proof of this.

The famous router may not be a bad idea. When OpenAI launched GPT-5, it did so with its famous “router,” which analyzed the request and decided on its own which variant of the model (more or less powerful) should answer the question. As we saw, that router tends to choose the “cheap” model, but that is not a bad idea: neither for OpenAI (for which processing the answer costs much less) nor for users (who also consume fewer resources and have more of their free quota left for other questions that may need “reasoning”).
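How OpenAI's router actually decides is not public, so the following is only a toy illustration of the general idea: send a request to the expensive variant only when it looks like it needs it. The keyword list, the word threshold, and the variant names `"fast"` and `"reasoning"` are all hypothetical.

```python
# Hypothetical hints that a request may benefit from a reasoning model.
REASONING_HINTS = ("prove", "step by step", "debug", "plan")


def choose_variant(prompt: str, max_cheap_words: int = 200) -> str:
    """Pick a model variant with a crude heuristic (illustrative only)."""
    text = prompt.lower()
    # Very long requests go to the more capable variant.
    if len(text.split()) > max_cheap_words:
        return "reasoning"
    # So do requests that contain one of the hint phrases.
    if any(hint in text for hint in REASONING_HINTS):
        return "reasoning"
    # Everything else is answered by the cheaper variant.
    return "fast"


print(choose_variant("What is the capital of France?"))
print(choose_variant("Debug this function step by step"))
```

A real router would presumably rely on far richer signals than keywords, but the economic logic is the same: every request kept on the cheap path avoids paying for reasoning tokens it did not need.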

Image | Levart Photography | Igal Ness

In Xataka | AI agents are promising. But as in Tesla’s FSD, you better not take your hands from the steering wheel
