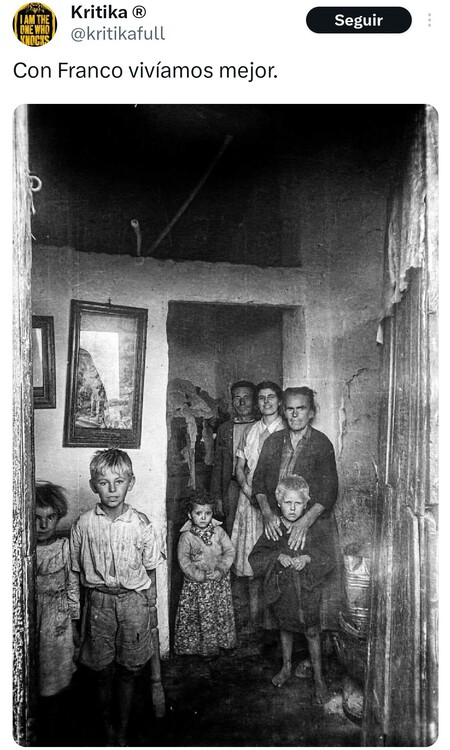

A photograph of a humble Spanish family from the 1950s has recently become the center of a viral controversy on X. The photograph ended up triggering a frenzy among many users. That frenzy was fueled by blind trust in the answers given by an artificial intelligence, in this case Grok. It is yet another example of how verifying historical information with AI has its pitfalls.

What happened. It all started when the user @kritikafull published a black-and-white photograph of a family living in poverty, accompanied by an ironic phrase: "We lived better." The image racked up thousands of views. It also caught the attention of many users who doubted that the photo had been taken in Spain. As has become common on the social network lately, they ended up turning to Grok to verify the information.

The origin of the controversy. Image: Arenas Photographic Studio

A problematic answer. Grok mistakenly identified the photograph as an image taken during the Great Depression in the United States. It attributed the photo to photographer Walker Evans and claimed that it showed the Burroughs family. This answer was massively replicated in dozens of posts that racked up thousands of views. The error came to look like the truth and was used to discredit the original post.

Some research. The user @ropamuig37, who describes herself in her profile as a historian, decided to check the facts with a reverse image search on Google Lens. The result led her straight to the photographic archive of the University of Malaga, where the image is clearly catalogued as "Housing, August 1952, Malaga, Spain". The photograph is part of a series of reports documenting Spanish housing of the time.

If the AI says no, I believe it. When the historian provided Grok with the real documentation, the AI remained inflexible for hours. It insisted that it was an "error by the UMA" (the University of Malaga) and maintained that it had "verified" the resemblance to the American photo. Even after the historian gave it direct links and obvious visual comparisons, Grok took almost two hours to acknowledge its mistake.

The damage was already done, since the posts that repeated Grok's "verification" had already flooded the social network and racked up thousands of views. Meanwhile, the genuine verification by the historian and other users, as @Remusokamias points out in their thread, initially earned only a fraction of the reach achieved by the posts that spread Grok's wrong answer.

A bigger problem. On the platform, it has become normal to use Grok to run all kinds of checks on other users' posts. "Grok, explain this" and "Grok, is this true?" are increasingly common phrases on X. Millions of users there have begun delegating to AI the verification and comprehension tasks that traditionally required cross-checking a variety of sources. And of course, at least for now, blind trust in these tools can generate large-scale misinformation. We have already seen this over the last few years since the AI boom. Given the growing sophistication of these tools, it is a consequence that is only getting worse.

In polarized contexts, where any piece of information can be exploited politically, AI can also become a dangerous tool. This is especially true because of its ability to generate quick answers that appear verified when people use it to check certain facts. Fortunately, this case was not serious, but it reflects the massive adoption of AI tools and how much we are starting to trust them.

Cover image | Arenas Photographic Studio and Walker Evans

In Xataka | Microsoft bet everything on OpenAI to win the AI race. It is starting to realize its mistake