Over the last few years, artificial intelligence has crept into our routines as a practical tool: generating images, summarizing, analyzing, programming. Lately, though, it has been crossing a more demanding frontier, that of systems that make decisions with physical consequences in the real world. That also includes space. NASA JPL has just announced that the Perseverance rover has completed the first drives on another world whose route was planned by AI. In terms of planetary exploration, this is not a great leap in distance but something more delicate: proof that a technology designed to interpret information and propose actions can begin to be integrated, with supervision, into the way other worlds are explored.

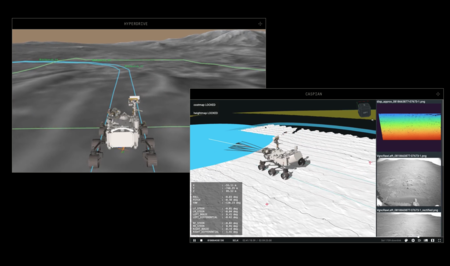

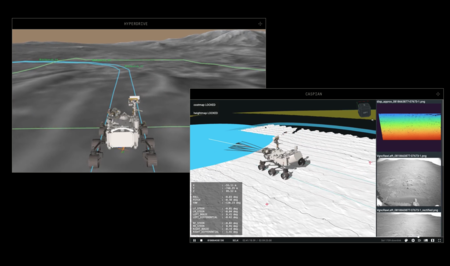

What exactly did the AI do? The test took the form of two drives carried out on December 8 and 10, 2025, both inside Jezero Crater. On those two days, the team brought in AI models with vision capabilities for a very specific task: proposing waypoints, that is, the intermediate locations on which the driving plan is then built and sent to the rover. This type of planning is normally done manually by specialists who analyze images and terrain data. On this occasion, AI generated the waypoints so that Perseverance could safely navigate a complex area, under the leadership of the rover's own operations center at JPL and in collaboration with Anthropic.

A basic limitation. Mars is far away, and you can't drive a rover like a remote-controlled car. JPL itself notes that the red planet is, on average, about 225 million kilometers from Earth, a distance that introduces communication delays and makes real-time control unfeasible. For this reason, missions operate with a different logic: the terrain is analyzed, routes are drawn in segments and instructions are sent through the Deep Space Network. The rover executes them and the result is confirmed after a delay. It is a well-proven workflow, but it is also slow, especially when the goal is to advance through complex areas without putting the vehicle at risk.
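To put a number on that delay, here is a minimal sketch that converts the average distance quoted above into signal travel time. It assumes a straight-line path at the speed of light and ignores relay hops through orbiters, Deep Space Network scheduling and onboard processing, so it is only a lower bound; the function and constant names are illustrative, not anything from JPL.

```python
# Rough light-time estimate for an Earth-Mars radio signal.
# Assumption: direct line-of-sight path at the speed of light in vacuum.

SPEED_OF_LIGHT_KM_S = 299_792.458    # exact value by SI definition
AVERAGE_EARTH_MARS_KM = 225_000_000  # average distance quoted in the article


def one_way_delay_seconds(distance_km: float) -> float:
    """Time for a signal to cover distance_km once, in seconds."""
    return distance_km / SPEED_OF_LIGHT_KM_S


def round_trip_delay_seconds(distance_km: float) -> float:
    """Time to send a command and receive the confirmation, in seconds."""
    return 2 * one_way_delay_seconds(distance_km)


if __name__ == "__main__":
    one_way = one_way_delay_seconds(AVERAGE_EARTH_MARS_KM)
    round_trip = round_trip_delay_seconds(AVERAGE_EARTH_MARS_KM)
    print(f"One-way delay:    {one_way / 60:.1f} min")    # 12.5 min
    print(f"Round-trip delay: {round_trip / 60:.1f} min")  # 25.0 min
```

At the average distance, a command and its confirmation are separated by roughly 25 minutes at best, which is why a rover cannot be steered interactively. The real distance changes with the positions of both planets in their orbits, so the delay on any given day can be noticeably shorter or longer.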

The milestone figures. JPL details that, in the first demonstration on December 8, 2025, Perseverance advanced about 210 meters. In the second, on December 10, it traveled around 246 meters. In total, roughly 456 meters across two drives. It is not an epic feat, nor does it pretend to be. What matters is that these routes followed a different scheme than usual: the planning was built from the AI-proposed waypoints, and the rover then executed the plan on terrain that demands precision because it does not forgive mistakes.

A demonstration that AI continues to gain ground. “This demonstration shows how far our capabilities have advanced and expands how we will explore other worlds,” said NASA Administrator Jared Isaacman. He closed with an idea that serves as an editorial guide for the entire experiment: “Autonomous technologies like this can help missions operate more efficiently, respond to challenging terrain, and increase scientific performance as distance from Earth increases.” For now, the demo is limited, but it's hard not to read it as a sign of what is coming. Autonomy is no longer discussed only in laboratories; it is also being tested on Mars.

In context. We are not talking about just any AI. Anthropic's Claude models have been gaining ground as a tool for programming tasks for some time, becoming a reference option and even threatening ChatGPT. That reputation has not stayed within the developer community: according to Mark Gurman (Bloomberg), Apple is reportedly beginning to integrate Claude in a structured way into its AI strategy for Xcode; and, according to Insider, Meta has incorporated Claude into “Devmate”, an internal debugging-oriented tool.

Images | NASA | Anthropic

In Xataka | Anthropic has rewritten its 25,000-word “Constitution” for Claude. It is the manual for how the AI should behave
