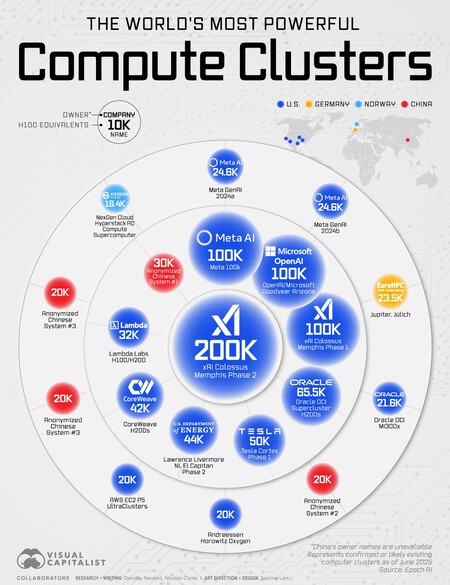

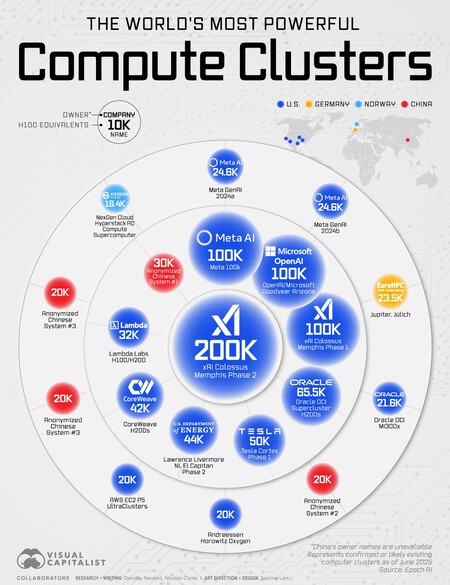

The rise of AI has set off a new global "arms race". The aim is not to dominate another territory, but to amass as much computing power as possible. The major technology companies are deploying data centers around the world with one goal in mind: training artificial intelligence. Some of these data centers are real monsters, and in this chart we can see the most powerful clusters in the world, with one standout protagonist:

Elon Musk.

Cluster. Before getting into numbers, a nuance. When we talk about computing power, we can mean a computer cluster or a supercomputer. The latter is an extremely powerful system that can be built with processors specially designed to reach extreme levels of performance or, more commonly, from thousands of high-performance servers. Supercomputers are used for scientific simulations and tasks that demand enormous amounts of computation, and their cost is staggering.

On the other hand, we have the "affordable" version of a supercomputer: the computer cluster. It is a set of interconnected machines that work in parallel to solve problems. It is similar to a supercomputer, but it has the advantage of being more flexible, because the cluster can be expanded as more machines are needed. In addition, the components are more standard, which also keeps the cost lower. That said, the distinction between the two has blurred in recent years.

The 100,000 club. With that out of the way, let's go back to the chart produced by Visual Capitalist with data from Epoch AI. It shows the most powerful clusters at present, with one catch: it includes both planned and operational ones. xAI, Elon Musk's company, switched on Colossus Memphis Phase 1 last year, a huge data center with 100,000 NVIDIA H100 GPUs built to train Grok, its AI model. It even surprised Jensen Huang, CEO of Nvidia.

It is a computing monster with enormous processing power, and the figure is expected to rise to 200,000 GPUs. We will look at the energy consequences of this later. After Musk's company comes Meta, which says it has a cluster "bigger than 100,000 H100 GPUs" for its Llama 4 model. Others are more secretive. Microsoft, for example, is estimated to have 100,000 GPUs, a mix of H100s and H200s, in its cluster for Azure, Copilot and OpenAI's models.

Two worlds. Outside that 100,000 club we have Oracle with its 65,536 NVIDIA H200s, another Musk company, Tesla, with Cortex Phase 1 and its 50,000 GPUs, and the United States Department of Energy with El Capitan, the most powerful supercomputer in the world. Whether the figures are official or estimated, what this chart makes clear is that one country has taken AI computing seriously: the United States.

US companies seem to be pushing the hardest with their data centers in the United States (of the 10 clusters, the first nine are in the US and the last is in China), and they are building not only within their borders but also abroad. Examples include Meta's plan to build data centers in Spain, or the data center that is practically the size of Manhattan.

European expansion. The chart includes two European clusters. On the one hand, Jupiter, at the Jülich Supercomputing Centre in Germany, with its confirmed GPUs. On the other, Nexgen in Norway, with about 16,300 GPUs. Europe has launched several funding initiatives aimed at boosting its competitiveness, through programs such as GenAI4EU and its budget of 700 million euros between 2024 and 2026.

The objective is to build large data centers, and the 2025 call drew 76 proposals from 16 different countries. That said, the development of European AI must be aligned with the AI Act, the regulation in force since February 2025 that seeks to ensure transparency and ethical AI.

Numbers vs. efficiency in China. Beyond US companies, the country that has really stepped up in AI is China. Following a roadmap very different from the West's, China is focusing on having (supposedly) fewer GPUs at work, operating more efficiently, at much lower cost than American companies and with equivalent results. DeepSeek and the more recent Kimi are two examples.

Nvidia rubs its hands. In this whole battle for AI, there is one clear winner: Nvidia, regardless of who has more or fewer GPUs to do the job. In China the picture is less clear because of the trade ban, but the world's main data centers run on Nvidia's architecture with its H100 and H200 GPUs. And that is only counting the "normal" AI cards, since the company also has the B200, with four times the performance of the H100.

In fact, the company seems so focused on the AI race that it appears to have neglected the area where it led AMD for years: its gaming cards.

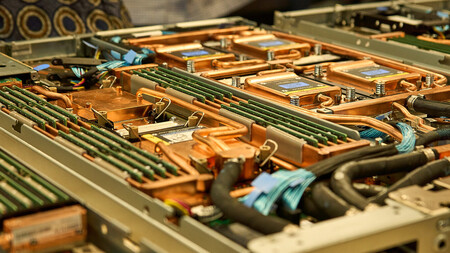

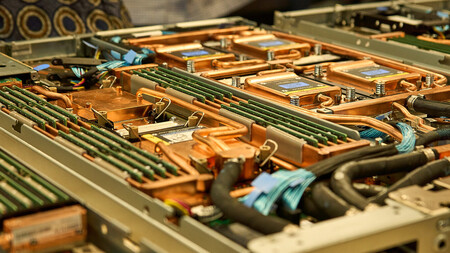

These are Lenovo data center servers. Companies are looking to reduce their footprint by reusing the hot water left over from cooling to, for example, fill swimming pools or supply showers. Image | Xataka

The planet, not so much. The consequence of this AI expansion is that data centers need not only huge amounts of energy to run, but also water to dissipate the heat from the equipment. There is one notable absence from the chart, Google, which also operates its own AI data centers and which, along with others such as Meta or Microsoft, needs nuclear power plants to feed its facilities.

Consumption is so extreme that renewables fall short during demand peaks, forcing the use of fossil fuels such as coal or gas (it is estimated that Colossus's 200,000 GPUs consume 300 MW, enough to power 300,000 homes). And, as we said, water use has become a point of contention in the territories being considered for new data centers. The need for cooling is so great that China is already building at the bottom of the ocean.
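To put that estimate in perspective, here is a minimal Python sketch of the arithmetic behind it. It uses only the three figures quoted above (300 MW, 200,000 GPUs, 300,000 homes) and assumes the power is split evenly, which is a simplification. The function name `watts_per_unit` is illustrative, not taken from any source.

```python
# Figures quoted in the article; they are estimates, not measurements.
TOTAL_POWER_MW = 300
GPU_COUNT = 200_000
HOMES_POWERED = 300_000


def watts_per_unit(total_mw: float, units: int) -> float:
    """Average power per unit in watts, assuming an even split (a simplification)."""
    return total_mw * 1_000_000 / units


watts_per_gpu = watts_per_unit(TOTAL_POWER_MW, GPU_COUNT)
watts_per_home = watts_per_unit(TOTAL_POWER_MW, HOMES_POWERED)

print(f"Average draw per GPU:  {watts_per_gpu:.0f} W")
print(f"Average draw per home: {watts_per_home:.0f} W")
```

Under these assumptions the estimate works out to about 1.5 kW per GPU and 1 kW per home. The per-GPU figure is a facility-wide average, so it folds in host servers, networking and cooling rather than the chip alone.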

In Xataka | China wants to become the world's AI engine. And its plan is to depend only on itself
